I Was Kicked From My AI Memory. Here’s What I Did With 6 Months of Voice Data

After Meta acquired Limitless, EU users were banned. I exported 6 months of conversations, deleted my account, and built something better with Claude Code.

The Message That Changed Everything

“Limitless service is no longer available in your region. You have until December 19, 2025 to download your data.”

That’s how my 6-month relationship with the Limitless Pendant ended. Not with a goodbye, but with a 14-day countdown to delete everything I’d trusted to their cloud.

After Meta’s acquisition in December 2025, Limitless withdrew from the EU, UK, Israel, South Korea, Turkey, and Brazil. No opt-in for existing users. No grandfather clause. Just: export your data and goodbye.

I had 10GB of voice recordings. Six months of meetings, random thoughts, conversations with friends, and late-night ideas I’d trusted to an AI that promised to “remember everything.”

This is the story of what I did with that data, why Limitless failed to deliver on its AI memory promise, and what I learned about the future of personal AI.

Why Limitless Failed Its Promise (It’s Not Just Meta)

Before the acquisition, I was already frustrated. The promise was simple: an AI that remembers everything you say and helps you recall it later.

The reality? Three fundamental problems.

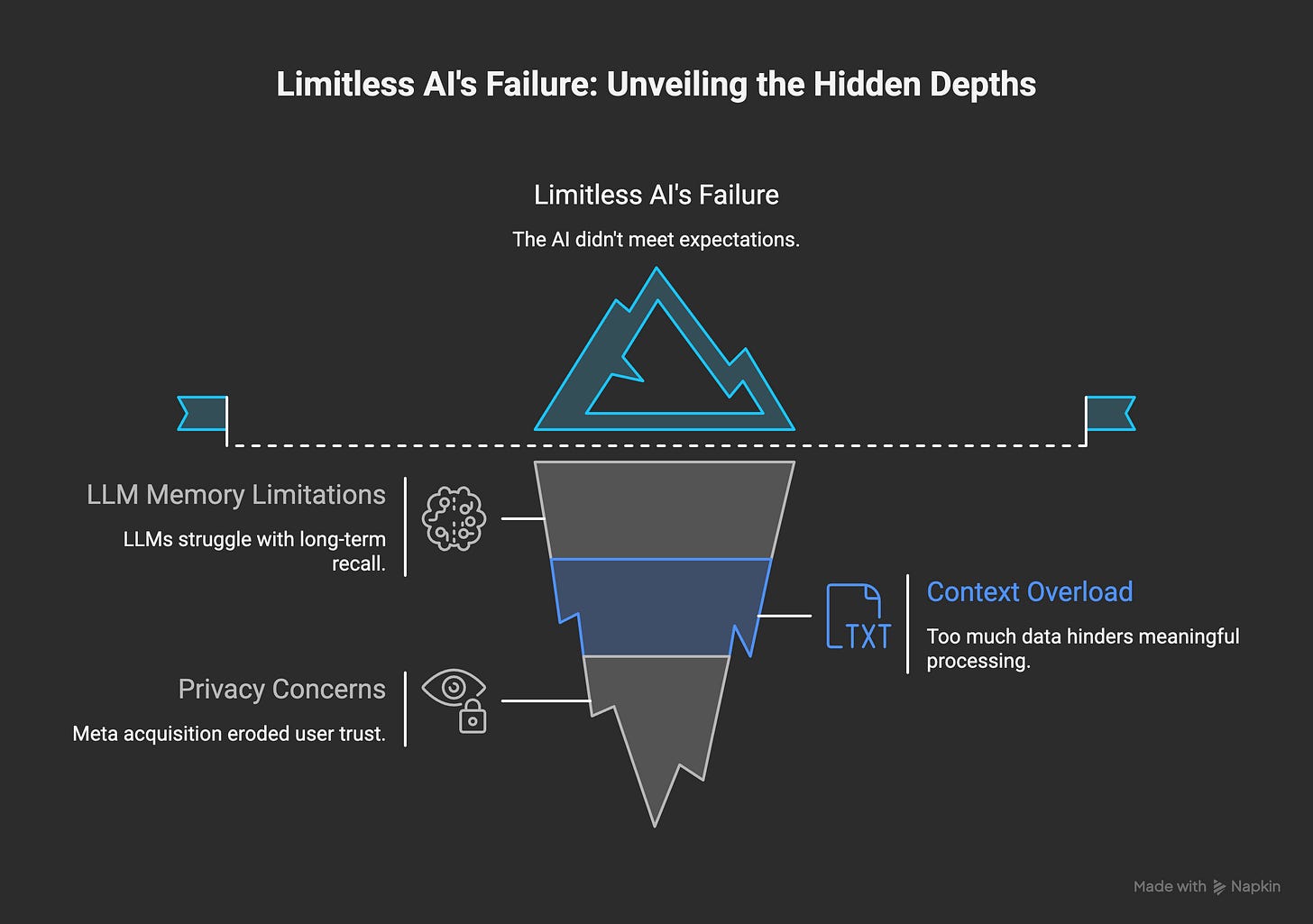

Problem 1: LLMs Can’t Actually Remember

Here’s the dirty secret of AI memory: large language models have quadratic computational complexity. Doubling the context window requires roughly four times the computation.

Even with Gemini’s 1-million token window, research shows LLMs don’t “robustly make use of information in long input contexts”. Models perform best when relevant information is at the beginning or end—they literally forget the middle.

My 6 months of conversations? Mostly middle.

The Limitless AI couldn’t actually surface insights from three months ago. When I asked “what did I discuss with my team in September about the project deadline?”, it either hallucinated or said it couldn’t find anything. The “AI memory” was more like a fancy transcription service with a mediocre search.

Problem 2: Too Much Context, Not Enough Signal

An agent limited to 128k tokens suffers from “catastrophic forgetting” as conversations pile up.

My 10GB of voice data wasn’t 10GB of insights. It was:

30% background noise

40% small talk (”let me share my screen”)

20% context that’s only useful in the moment

10% actually valuable information

But Limitless treated it all the same. No priority. No filtering. Just a giant pile of transcripts that the AI couldn’t meaningfully process.

Problem 3: Privacy Theater

Limitless marketed themselves as privacy-focused. HIPAA compliant. Your data stays yours.

Then Meta bought them.

Within days, users were furious:

“Assume I’m not the only one looking at this pendant on my desk like it’s a wiretap.”

“First reaction of many users was to ask for a refund.”

“There’s an escape hatch for anyone trying to de-Zuckerberg their setup.”

Remaining users had to accept new Meta-aligned Privacy Policy and Terms of Service. The “privacy-first” company handed 6 months of my intimate conversations to Facebook’s parent company.

Why AI Wearables Aren’t Ready (A Contrarian Take)

CES 2026 was full of always-on AI wearables. SwitchBot’s “second brain” that records everything. Lenovo’s pendant. At least a dozen companies pushing the same concept.

I wore one for 6 months. Here’s what nobody talks about:

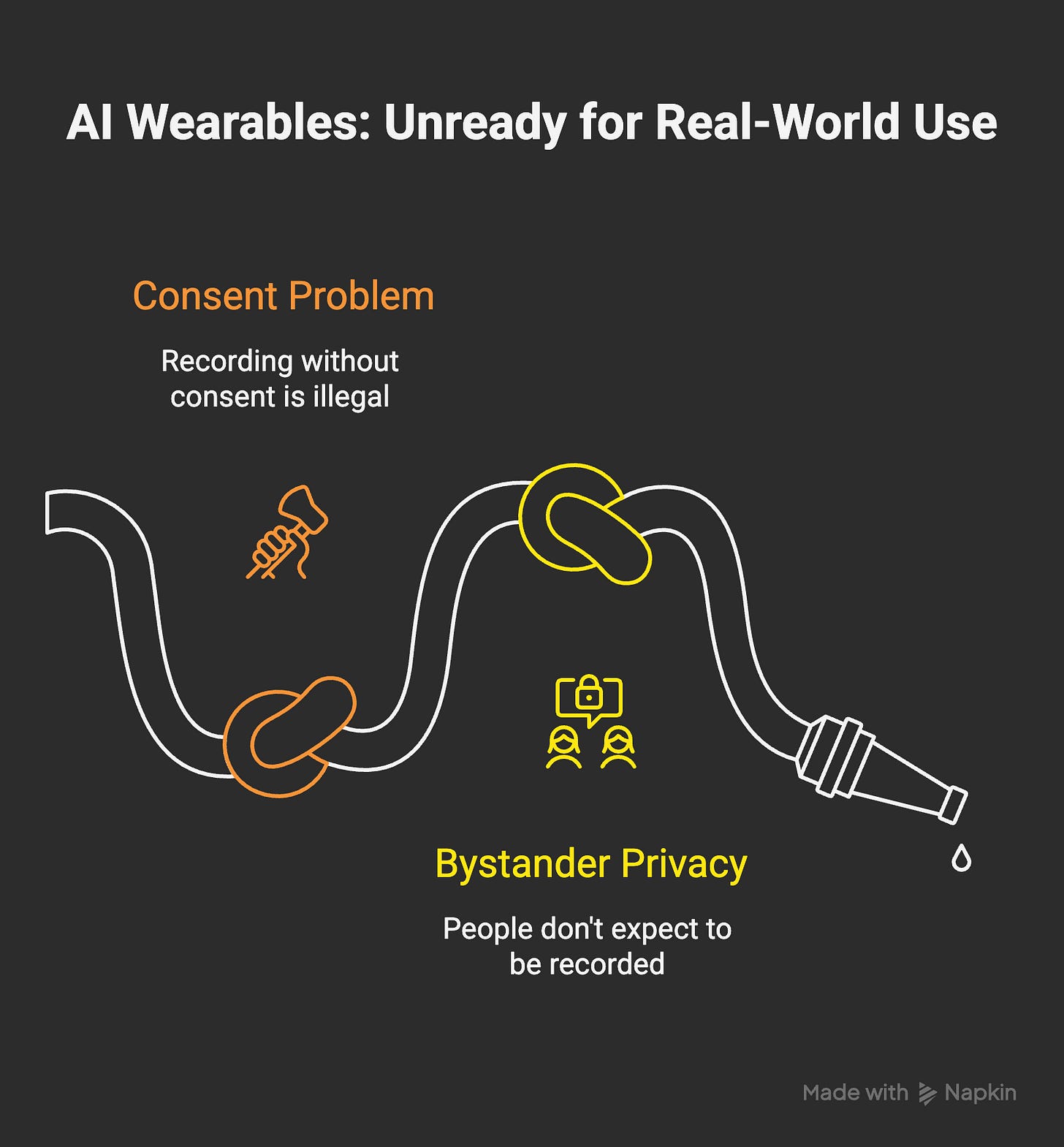

The Consent Problem Is Unsolvable

In many countries, recording someone without consent is illegal. Every time I wore my pendant, I had to decide: do I tell everyone? Do I turn it off? Do I just... hope they don’t notice?

I stopped wearing it to social gatherings. Then to casual meetings. Eventually, it sat on my desk during calls I explicitly wanted transcribed—which my phone can do anyway.

Bystander Privacy Is Real

People meeting you don’t expect to leave behind their entire conversation in audio files. They don’t understand how the data will be used, shared, or stored.

The social cost is higher than the benefit. When people see you wearing one of these devices, they assume they’re being recorded. That changes everything about how they interact with you.

The Future Is Hybrid (Local + Cloud)

Here’s what I believe now: AI memory only works when it’s local-first.

Apple’s privacy-first AI strategy is pointing the right direction. CES 2026 showed Intel chips running 120B parameter models entirely on-device.

The architecture that actually works:

Local processing first — Sensitive data never leaves your device

Cloud for heavy lifting — Complex reasoning sent anonymized when needed

You own the files — Markdown, not proprietary databases

Transparent storage — See exactly what the AI “remembers”

Research shows a “router” approach can reduce calls to cloud models by 40% with no quality drop. Local for personal data. Cloud for computational tasks.

This isn’t just about privacy paranoia. It’s about sustainability. When Limitless got acquired, my data became worthless because it was locked in their cloud. With local files, I keep them forever.

What I Actually Did With My Data

When I got that 14-day notice, I had a choice: delete everything or build something better. I chose to build.

Step 1: Export Everything

Limitless let me download all transcripts as JSON files. 6 months of conversations, ~10GB of audio and text.

Step 2: Delete Account and Data

I didn’t trust Meta with even a backup. Full deletion. Verified it was gone from their servers.

Step 3: Structure With Claude Code

Here’s where it gets interesting. I used Claude Code to process my transcripts:

I have 6 months of conversation transcripts from an AI wearable.

Help me:

1. Extract key decisions and commitments

2. Identify recurring themes and topics

3. Create a personal knowledge base I can reference

4. Remove noise (small talk, background, duplicate content)

Claude Code helped me:

Parse JSON transcripts into clean markdown

Extract ~200 actually meaningful conversations from thousands

Create topic clusters (work projects, personal goals, people I mentioned)

Build a CLAUDE.md file that captures who I am, what I care about, and what I’ve decided

Step 4: Build Local Memory

I now use Basic Memory and MCP Knowledge Graph for persistent context. Everything lives in markdown files on my machine.

When I talk to Claude now, it actually knows me—because I gave it structured context I control, not 10GB of raw audio it can’t process.

The Omi Alternative (If You Still Want Hardware)

If you want the pendant experience without Big Tech ownership, Omi is the open-source alternative.

Why it’s different:

Open source — Hardware and software both

Self-hostable — Your data stays on your servers

No vendor lock-in — Community plugins, extensible

HIPAA compliant — Actually, not just marketing

$89 — Not $99/month subscription locked

Most Limitless users migrated to Omi. Some even jailbroke their Limitless pendants with Omi firmware. The downside? It requires technical knowledge. But that’s the point—if you understand what you’re building, you understand what you’re risking.

What This Experience Taught Me

Never Trust Proprietary AI Memory — If your “memory” lives on someone else’s server, it’s not yours. One acquisition, one policy change—and it’s gone.

LLMs Aren’t Ready for Total Recall — MIT’s new RLMs might change this, but today’s models can’t meaningfully process months of unstructured conversation.

The Privacy Trade-Off Isn’t Worth It — I gave away 6 months of intimate conversations for an AI that couldn’t remember what I said 3 months ago.

Local-First Is the Future — CES 2026 proved local AI is ready. On-device processing, private by default, yours forever.

Structure > Volume — 200 well-organized notes beat 10GB of raw transcripts. Curation matters more than capture.

🛠️ Your Personal Data Toolkit: Claude Code Prompts

Whether you’re leaving Limitless, Omi, Plaud, Bee, or any other voice AI hardware, here’s a practical toolkit for taking control of your data. These prompts work with Claude Code (or any capable LLM with file access).

Prompt 1: Initial Data Assessment

I have exported voice transcripts from [DEVICE NAME] in the /transcripts folder.

First, analyze the data structure:

1. How many files? What formats?

2. What date range do they cover?

3. Estimate total word count

4. Identify any patterns in file naming

Then give me a summary of what I'm working with.

Prompt 2: Topic Extraction & Clustering

Analyze my transcripts and create a topic map:

1. Identify the main themes/topics I talk about

2. Group related conversations together

3. For each topic cluster, list:

- Key insights or decisions mentioned

- People referenced

- Action items or todos

- Unresolved questions

Create a markdown file: topics-overview.md

Prompt 3: Build Your Personal CLAUDE.md

Based on my transcripts, create a CLAUDE.md file that captures:

## About Me

- What I work on

- My communication style

- Key projects/interests mentioned

## Preferences

- How I like to approach problems

- Tools/methods I prefer

- Pet peeves or things I avoid

## Key Context

- Important people in my life/work

- Ongoing projects or goals

- Decisions I've made and why

This file will be used to give AI assistants context about me.

Prompt 4: Extract Meeting Summaries

Find all conversations that look like meetings (multiple speakers,

structured discussion). For each meeting:

1. Date and participants (if identifiable)

2. Main topics discussed

3. Decisions made

4. Action items with owners

5. Open questions or follow-ups needed

Output as: meetings/YYYY-MM-DD-[topic].md

Prompt 5: Create Searchable Knowledge Base

Transform my transcripts into a searchable knowledge base:

1. Create an index.md with all topics alphabetically

2. For each major topic, create a dedicated file with:

- Summary of my views/knowledge

- Key quotes from transcripts

- Related topics (cross-links)

- Sources/dates of conversations

Structure: knowledge-base/

├── index.md

├── [topic-1].md

├── [topic-2].md

└── ...

Prompt 6: Privacy Scrub Before Sharing

I want to share some insights from my transcripts publicly.

Help me scrub sensitive data:

1. Identify all personal names, companies, specific numbers

2. Flag potentially sensitive business discussions

3. Create anonymized versions where needed

4. Suggest which content is safe to share vs. keep private

Output: shareable/ folder with cleaned content

💡 Pro tip: Start with Prompt 1, then run Prompt 3 to create your CLAUDE.md. This single file becomes incredibly powerful - any AI assistant that reads it will immediately understand your context, preferences, and working style.

This Applies to All Voice AI Hardware

While my experience was with Limitless Pendant, the same issues plague virtually every voice AI wearable on the market:

Omi (Friend) - Open source, but still cloud-dependent for AI processing. Same context window limits apply.

Plaud Note - Great hardware, but transcripts go through their cloud. No local processing option.

Bee AI - Promising concept, but faces identical LLM memory constraints when scaling to months of data.

Humane AI Pin / Rabbit R1 - Already struggling with basic queries. Long-term memory is not even on the roadmap.

The fundamental problem is architectural, not device-specific. Until we have either:

Truly infinite context windows (unlikely due to computational costs)

Sophisticated local-first AI that can run on-device

Hybrid architectures with smart retrieval (RAG done right)

...these devices will continue to disappoint anyone hoping for true “AI memory” of their lives.

Related Reading from Digital Thoughts

This is part of my ongoing exploration of voice AI, personal data, and building systems that actually work. Here’s more from the journey:

Voice AI Hardware: Limitless Pendant Real-World Review - My original review and automation experiments with the Pendant

Limitless Pendant: Meta Acquisition & AI Wearable Lessons - What we can learn from Meta’s interest in this space

Building an AI Memory Palace: Personal Digital Mind - The broader vision of connected personal intelligence

Cursor vs Google AI Studio: IDE Comparison 2025 - Tools I use for coding with AI (including Claude Code)

The Bottom Line

Limitless promised AI memory. What they delivered was a transcription service that got sold to Meta and banned me from using it.

But I don’t regret the experiment. I learned what AI wearables can and can’t do, why privacy matters more than convenience, how to build local-first AI memory that actually works, and that the future is hybrid—local context, cloud reasoning.

If you’re still using a cloud-based AI wearable, ask yourself: What happens when the company gets acquired? Can you export your data today? Do you own your memory, or does Big Tech?

For me, the answer is now clear. My AI memory lives in markdown files on my machine, structured with Claude Code, and processed locally first. That’s not as sexy as a pendant that “remembers everything.” But it actually works.

Action Items

If you’re using Limitless (or any AI wearable):

Export your data TODAY (don’t wait for the deadline)

Review what’s actually in those transcripts

Consider local alternatives (Omi, Basic Memory, MCP tools)

Structure your data before feeding it to AI

If you’re considering AI wearables:

Ask about data ownership and export

Check if they operate in your region

Understand the privacy laws where you live

Consider phone-based alternatives first

Have you been affected by the Limitless EU ban? I’d love to hear your story in the comments.