When Your AI Wearable Gets Acquired by Meta: The Limitless Pendant Story

The rise, fall, and lessons from six months with the Limitless Pendant

So here’s a story about being an early adopter in the AI hardware space, and why sometimes the biggest risk isn’t the technology... it’s the company behind it.

About six months ago, I got my Limitless Pendant. You know, that AI wearable that promised to be your always-on memory assistant. The one you clip to your shirt that records everything, transcribes it, and supposedly gives you superpowers of recall and insight. I even wrote about my early experiments with it... and honestly? I went all in.

The Honeymoon Phase

I wasn’t just using the dedicated app like a normal person. No, I went full automation mode. I connected it through their API to build custom workflows with Make and Zapier. When I mentioned specific topics in conversations, it would automatically create tasks in my task manager. When I started a reflection session, it would spin up a new page in my journal.
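The trigger logic behind workflows like these can be sketched roughly as follows. This is a minimal, hypothetical Python illustration. The real workflows ran as Make and Zapier scenarios fed by the Limitless API. The trigger phrases, the `extract_actions` function, and the `Action` type are made up for the example and are not part of any Limitless or Zapier API.

```python
import re
from dataclasses import dataclass

# Hypothetical trigger rules: a phrase heard in a transcript maps to an action kind.
# In the real setup, this kind of matching lived inside Make/Zapier scenarios.
TRIGGERS = {
    r"\bremind me to (?P<body>[^.?!]+)": "task",
    r"\bstart(?:ing)? (?:a )?reflection\b": "journal",
}

@dataclass
class Action:
    kind: str  # "task" or "journal"
    text: str  # captured fragment, or the whole sentence if the rule has no capture

def extract_actions(transcript: str) -> list[Action]:
    """Scan one transcript and return the actions its trigger phrases imply."""
    actions = []
    # Split into rough sentences on ., ? or ! followed by whitespace.
    for sentence in re.split(r"(?<=[.?!])\s+", transcript):
        for pattern, kind in TRIGGERS.items():
            match = re.search(pattern, sentence, flags=re.IGNORECASE)
            if match:
                # Use the named "body" group when the rule defines one.
                body = match.groupdict().get("body") or sentence
                actions.append(Action(kind, body.strip()))
    return actions
```

The sketch also shows why this kind of automation is brittle: a single transcription slip, such as “remind me too email the designer”, matches nothing, and the task silently never gets created. A fixed phrase like “starting a reflection” leaves far less room for that kind of miss.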

Was it perfect? Hell no.

The transcription would occasionally throw weird artifacts into my automations. I’d have to go back and fix things. Most of my experiments stayed at the “interesting but janky” level. The only workflow that really clicked was the journal entry automation... probably because there was very little room for the AI to misinterpret what I was doing.

But here’s the thing... despite the rough edges, I saw something real. The Limitless app kept getting better. It started surfacing genuinely interesting insights from my reflections. It would catch patterns I wasn’t seeing. That’s the promise of AI hardware that’s always with you, right? It has all the context. It knows what you’ve been thinking about, working on, worrying over.

There’s something powerful about that level of ambient awareness.

Then Came THE EMAIL

You know the one. The “We’re updating our Privacy Policy and Terms of Service” email that actually matters.

The email that told me, as an EU user, that my Pendant would stop working.

Just... done. No more service.

For US users, it was somehow even worse. They could keep using it, but under terms that basically said “everything that happens with your data is your problem now, and by the way, we can do pretty much whatever we want with it.” In practice, the new terms shifted liability onto users while granting Limitless broad rights to use their data.

The developer Slack channel? Absolute chaos. People were furious. And rightfully so... many of us had waited over a year to get our Pendants. We’d been patient through delays, through beta bugs, through all of it. Early adopters who paid $49 in pre-orders, or $399 at launch. People who had generated months of deeply personal conversation data. And now, suddenly, it was a paperweight.

The Meta Bombshell

And then the real news dropped.

CEO Dan Siroker posted a video on December 5th, 2025. Limitless had been acquired by Meta.

Meta. Facebook’s parent company. The company with one of the worst privacy track records in tech history. The company that has been fined billions of euros for privacy violations in Europe.

The same Dan Siroker who had built the company on a personal story about hearing loss and human augmentation. The same team that had raised $33 million from investors including Andreessen Horowitz and Sam Altman on the promise of privacy-first AI wearables.

Sold to Meta.

Slack went from angry to nuclear. The betrayal wasn’t just about losing functionality... it was about the complete reversal of everything the company had stood for. Privacy-first? Gone. User control? Gone. The entire ethos that made people trust them with the most intimate data possible... their thoughts, their conversations, their daily lives.

Gone.

The Open Source Lifeline (That I Didn’t Take)

Plot twist: the open-source company behind Omi swooped in. Through some clever Bluetooth reverse engineering, they added support for the Limitless Pendant. It was a genuine community effort... developers who saw abandoned hardware and decided to give it new life.

I tried it for a few days. It was actually pretty nice... more integrations, built-in Zapier support, and the whole open-source angle felt better from a privacy perspective. The community was supportive, the documentation was decent, and it worked.

But then I started really thinking about the value proposition. Not just for Omi, but for the entire category of AI wearables.

The Real Problem Nobody Talks About

I had collected about 10GB of audio data and transcripts from my Pendant over six months.

And here’s the uncomfortable truth... there’s no LLM that can actually make good use of that much conversational data. Not really. Not in the way the promise suggested.

There’s no sufficiently efficient vector database to truly leverage that volume of natural language context. I saw the limitations in the API, in MCP integrations, and eventually in the Limitless app itself. The app would surface interesting insights here and there... but it couldn’t maintain coherent understanding across months of recordings.

The AI could tell me what I talked about last Tuesday. It could search for keywords. It could summarize individual conversations. But could it truly understand the arc of my thinking across weeks and months? Could it connect disparate ideas I’d mentioned in different contexts? Could it become the “extended brain” that the marketing promised?

Not really.

And for people like me who live digitally... I still had to manually create automations to pull useful context from all my other tools and somehow merge it with Limitless. My tasks were in Notion. My meetings were in Google Calendar. My projects were scattered across multiple systems. The Pendant only saw the slice of my life that happened in spoken conversation.

It wasn’t efficient. It was duct tape and hope.

And then I realized something else: I can already do reflection with AI in Notion using the meeting transcription feature. And honestly? I get better results. It’s more deterministic. More reliable. More integrated with where I actually work. The context is richer because it sits alongside my tasks, my notes, my projects.

The Pendant was solving a problem that... maybe wasn’t actually that big of a problem for me after all.

What I Learned

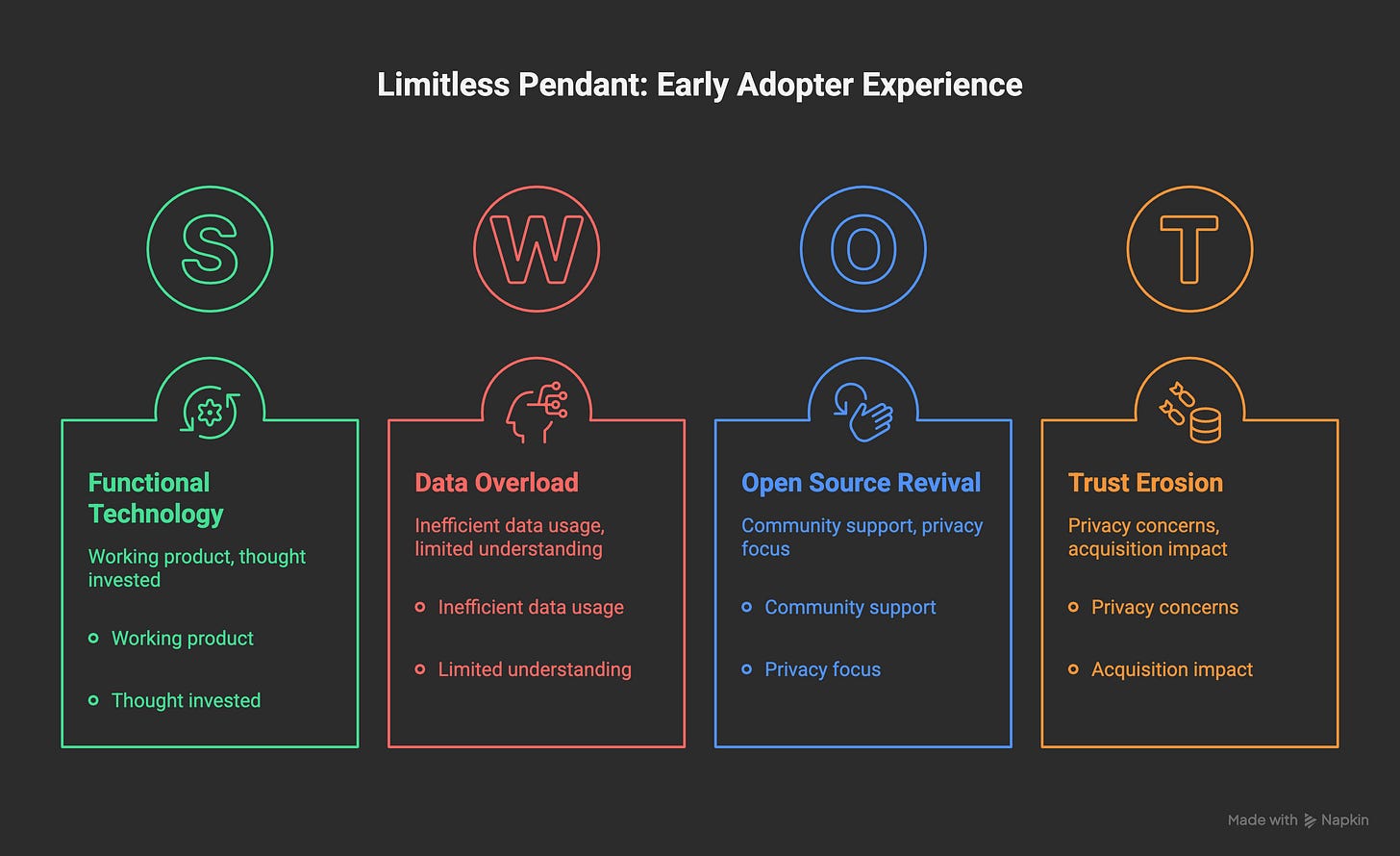

This isn’t a “Limitless was bad” post. The technology worked. The team clearly put thought into the product. In those early weeks, I genuinely believed I was using the future.

This isn’t even purely a “Meta is evil” post, though... you know, the privacy implications of that acquisition speak for themselves.

This is an “early adopter risk actualized” post.

When you’re on the bleeding edge of AI hardware, you accept certain risks:

The product might not work as promised

The technology might not be ready

The company might pivot

The economics might not work out

But what happened here was a different kind of failure. It was a communication failure. A trust failure.

Limitless had early adopters who:

Waited a year or more for hardware to ship

Paid $49 to $399 to be part of the vision

Spent hours building integrations and workflows

Generated months of deeply personal data

Trusted the company with their most private thoughts and conversations

And then the company:

Made sudden, unilateral policy changes with minimal warning

Cut off EU users entirely due to regulatory concerns

Announced an acquisition to a company with a terrible privacy reputation

Provided minimal communication about what would happen to user data

Showed little consideration for the community that had supported them

The Slack channel wasn’t just angry... it was hurt. These were true believers who felt betrayed. And you know what? They had every right to feel that way.

When you build a product based on intimate personal data, you’re not just building technology. You’re building a covenant of trust. And Limitless broke that covenant in the most public way possible.

What This Means for AI Wearables

I still think there’s something here. The idea of ambient AI that has full context of your life... that’s compelling. The vision of augmented memory, of never forgetting important details, of having AI that truly understands your context.

That vision is still worth pursuing.

But the execution isn’t ready yet. And I don’t just mean the technology.

The technical challenges:

We don’t have vector databases that can efficiently process months of conversational data

LLMs still can’t maintain coherent long-term understanding across that much context

Privacy-preserving on-device processing isn’t powerful enough yet

Battery life, audio quality, and transcription accuracy need to improve

The business model challenges:

AI wearables are expensive to build and ship (hardware is hard)

The subscription revenue needed to cover ongoing AI processing costs is substantial

The market is still tiny and early (selling 10,000 units is a lot for a startup, but nowhere near enough to sustain a hardware business)

Exit pressure from VCs pushes companies toward acquisitions over long-term sustainability

The trust challenges:

Users need guarantees about what happens to their data

Companies need credible commitment mechanisms that they won’t just sell to the highest bidder

Regulatory frameworks across different regions make global products nearly impossible

The incentives of ad-funded tech giants (like Meta) are fundamentally incompatible with privacy-first hardware

Maybe in a few years, we’ll have:

The infrastructure to actually process and make use of continuous conversational data

Better privacy frameworks that protect users even when companies get acquired

Companies that understand the covenant they’re making with early adopters

Business models that don’t require compromising user privacy to achieve profitability

But that time isn’t now.

The Aftermath

So the Pendant sits in a drawer. Ten gigabytes of my life, exported and archived. Another experiment complete. Another lesson learned about the gap between vision and reality in AI hardware.

The data export process was straightforward, at least. Limitless deserves credit for that... they made it easy to get your data out. JSON files with all the transcripts, audio files if you wanted them, searchable and portable.
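The export is at least searchable with a few lines of code. Below is a minimal sketch of a case-insensitive keyword search over an exported archive. It assumes each JSON file holds either one object or a list of objects with `date` and `transcript` fields; those field names, and the `search_archive` function itself, are hypothetical placeholders, not the documented Limitless export schema, so adjust them to whatever your files actually contain.

```python
import json
import re
from pathlib import Path

# Hypothetical field names: change these to match the real export.
DATE_KEY = "date"
TEXT_KEY = "transcript"

def search_archive(folder: str, query: str, context: int = 40) -> list[tuple[str, str, str]]:
    """Return (file name, date, snippet) for every case-insensitive hit of `query`."""
    if not query:
        return []  # an empty query would match at every position
    pattern = re.compile(re.escape(query), flags=re.IGNORECASE)
    hits = []
    for path in sorted(Path(folder).rglob("*.json")):
        try:
            record = json.loads(path.read_text(encoding="utf-8"))
        except (json.JSONDecodeError, UnicodeDecodeError):
            continue  # skip files that aren't valid JSON
        # Accept either a single object or a list of objects per file.
        entries = record if isinstance(record, list) else [record]
        for entry in entries:
            if not isinstance(entry, dict):
                continue
            text = str(entry.get(TEXT_KEY, ""))
            for match in pattern.finditer(text):
                start = max(0, match.start() - context)
                snippet = text[start:match.end() + context]
                hits.append((path.name, str(entry.get(DATE_KEY, "?")), snippet))
    return hits
```

This is exactly the “what did I say about X last Tuesday” level of recall: it finds mentions, but it has no notion of how an idea evolved across months of recordings.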

But what do you do with 10GB of conversation transcripts? Put them in a folder somewhere and maybe search through them occasionally when you’re feeling nostalgic? Train your own local AI model? (Good luck with that.) The data is portable in theory, but practically... it’s just dead weight.

And honestly? I don’t regret it.

This is what being an early adopter looks like. You pay the early adopter tax... sometimes in money, sometimes in time, sometimes in disappointment.

But you learn.

You learn what works and what doesn’t. You learn where the technology is actually ready and where it’s still vapor. You learn to read the signals of a struggling startup versus a sustainable business. You learn which promises are achievable and which are just compelling narratives for fundraising decks.

You learn that sometimes the biggest risk isn’t the technology failing... it’s the company succeeding just enough to become an acquisition target.

For Other Early Adopters

If you’re reading this and you’re also sitting on a now-defunct AI wearable, or you’re considering backing the next one on Kickstarter, here’s what I’d suggest:

Before you buy:

Can the company articulate a path to profitability that doesn’t involve selling your data?

Is the core technology on-device or cloud-dependent? (Cloud-dependent = you’re one policy change away from a paperweight)

What happens to your data if the company shuts down or gets acquired?

Is there an open-source alternative that could provide a lifeboat if things go south?

After you buy:

Export your data regularly (don’t wait for the shutdown announcement)

Build automations and integrations that reduce lock-in

Engage with the community so you hear the warning signs early

Treat it as an experiment, not a permanent solution

When things go wrong:

Make noise (companies respond to public pressure)

Support open-source alternatives if they exist

Share your experience so others can learn from it

Remember that the early adopter tax is real, and sometimes you pay it

What’s Next for Me

I’m not done with AI wearables. I’m still fascinated by the category. But I’m approaching it differently now.

I’m more interested in:

On-device processing that doesn’t depend on cloud services

Open-source solutions where the community owns the roadmap

Integration-first approaches that connect to tools I already use

Privacy-preserving architectures that don’t require trusting a single company

I’m also more realistic about what’s actually possible with current technology. The vision of an AI that knows everything about you and helps you make better decisions? That’s still science fiction. But an AI that helps you capture and search your thoughts? That’s doable, and Notion’s meeting transcription already does it well enough for my needs.

Maybe the future of AI memory isn’t a pendant or a pin or glasses. Maybe it’s just better integration between the tools we already use every day. Maybe it’s less about capturing everything and more about capturing the right things.

I don’t know yet. But I’ll keep experimenting. Because that’s what early adopters do.

We pay the tax, we learn the lessons, and we share what we learned so the next wave can do better.

The Lessons

For users:

Early adoption is a gamble. Sometimes you get a great product at a great price. Sometimes you get a paperweight and a lesson. Both have value, but only if you’re honest about the risks going in.

For founders:

When you build products based on intimate personal data, you’re making a promise. That promise is bigger than your cap table, bigger than your acquisition offers, bigger than your growth targets. Break that promise, and you lose more than customers... you lose the community of believers who made your company possible in the first place.

For the industry:

AI wearables are still in the “convince people it’s not creepy” phase. Every broken promise, every privacy breach, every startup that sells out to an ad company... that sets the entire category back. We need better frameworks, better business models, and better alignment between what we promise and what we deliver.

Epilogue: As I’m writing this, Omi is still supporting the Limitless Pendant hardware through their open-source app. If you’ve got one sitting in a drawer, it might be worth checking out. The community is good, the privacy model is better, and at least you’d get some use out of the hardware you paid for.

But for me? The experiment is over. The lessons are learned. And the next AI wearable I try will need to clear a much higher bar.

PS. How do you rate today’s email? Leave a comment or a “❤️” if you liked the article. I always value your comments and insights, and it also gives me a better position in the Substack network.