Why I Switched My AI Agent from Opus to Haiku (And It Got Better)

And cheaper!

Running an AI agent on Claude Max costs $200 a month. By Friday I had usually burned through 70-80% of my weekly usage limit, sometimes hitting the cap outright, and I thought I needed to switch platforms.

Turns out I just needed to use the right model for the right job.

Here’s what I learned about optimizing AI agent costs without compromising capability.

The Problem: Great Tech, Expensive Habit

I run an AI agent called Wiz. It’s built on Claude Code with persistent memory, a skill system, and full access to my infrastructure. Not a chatbot — more like a junior developer who works night shifts and never forgets context.

When I started, I used Opus 4.5 as my default. Then Opus 4.6 came out. I tried Fast mode. I experimented with swarms of agents. Everything worked great.

Except the usage numbers.

Claude Max gives you generous limits, but they’re not unlimited. And when you’re running an AI agent that:

Wakes up every hour to check tasks

Runs autonomous night shifts from 10 PM to 5 AM

Processes Discord messages in real-time

Generates daily reports

Scrapes job boards

Manages a blog pipeline

Deploys to production

...you burn through tokens fast.

By Friday, I’d regularly hit 70-80% of my weekly limit. Sometimes I’d get rate-limited mid-session. I paid an extra €50 one week just to keep going.

First Optimization: Sonnet + Selective Opus

My first fix was obvious: stop using Opus for everything.

I switched to Sonnet 4.5 as default, with:

Opus for complex tasks (architecture decisions, planning, heavy coding)

Haiku for simple lookups (summaries, file searches, API calls)

This helped. For about two weeks.

Then I noticed something: as I built more automations, the system scaled back up to the same usage levels. More cron jobs, more skills, more autonomous work (all good things!), but the token usage climbed right back to where it had been.

I was optimizing individual calls, but the volume kept growing.

The Experiment: What If Haiku Were the Default?

Here’s where I did something that sounds crazy.

What if I made Haiku my default model?

Not for everything — obviously I’d still need Sonnet and Opus for complex work. But what if the baseline was Haiku, and I only escalated when needed?

The conventional wisdom says this is backwards. Haiku is the “cheap” model. It’s for simple tasks. You use it when you don’t care about quality.

But here’s what I realized: for an AI agent, most work isn’t creative. It’s execution.

And Haiku is excellent at following instructions.
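The "Haiku baseline, escalate only when needed" idea can be sketched in a few lines. This is a minimal illustration, not Wiz's actual code: the tier names are plain labels rather than real API model IDs, and `call_model` is a hypothetical callable that returns `None` when the output fails whatever check you apply.

```python
from typing import Callable, Optional

# Tier order, cheapest first. These are labels, not real API model IDs.
TIERS = ["haiku", "sonnet", "opus"]


def run_with_escalation(
    task: str,
    call_model: Callable[[str, str], Optional[str]],
    start: str = "haiku",
) -> tuple[str, str]:
    """Try the task on the starting tier and move up one tier per failure.

    call_model(tier, task) is a hypothetical callable that returns a result
    string, or None when the output fails the caller's own check.
    Returns (tier_used, result); raises RuntimeError if every tier fails.
    """
    for tier in TIERS[TIERS.index(start):]:
        result = call_model(tier, task)
        if result is not None:
            return tier, result
    raise RuntimeError(f"all tiers failed for task: {task!r}")


# Stand-in for a real model call: pretend Haiku cannot handle "design" tasks.
def fake_call(tier: str, task: str) -> Optional[str]:
    if tier == "haiku" and "design" in task:
        return None
    return f"{tier} handled: {task}"


print(run_with_escalation("update the task list", fake_call))
print(run_with_escalation("design the new memory schema", fake_call))
```

The first call stays on the cheapest tier; the second moves up one step. How you decide that an output "failed" is the hard part, and it is deliberately left to the caller here.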

What Haiku Actually Does Well

When I looked at what Wiz actually does day-to-day, most of it falls into these categories:

Reading files and extracting data

Running scripts with specific parameters

Sending formatted emails or Discord messages

Updating task lists in Obsidian

Scraping job boards with predefined criteria

Generating reports from structured data

Following checklists (deploy this, test that, commit changes)

Triggering automations based on conditions

None of that requires creativity. It requires precision.

And Haiku is shockingly good at precision work.

Here’s a specific example. I have a skill that scrapes LinkedIn for Creative Director jobs for my friend Artur. The agent needs to:

1. Query LinkedIn’s job API

2. Parse the results

3. Filter by location and seniority

4. Dedupe against previous searches

5. Score each job (1-10) based on criteria

6. Format an HTML email

7. Send it

This is all structured work. There’s a checklist. Haiku executes it perfectly, every time, and costs a fraction of what Sonnet would.
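To show how mechanical that checklist is, here is a rough sketch of the middle steps: filter, dedupe, score, and format. Everything in it is made up for illustration, including the `Job` fields, the locations, and the scoring rule. Querying LinkedIn and sending the email are left out because they depend on credentials and APIs not described here.

```python
from dataclasses import dataclass
from html import escape


@dataclass(frozen=True)
class Job:
    job_id: str
    title: str
    location: str
    seniority: str


def filter_jobs(jobs, locations, seniorities):
    """Keep only jobs in the wanted locations and seniority levels."""
    return [j for j in jobs if j.location in locations and j.seniority in seniorities]


def dedupe(jobs, seen_ids):
    """Drop jobs whose ID was already seen; records new IDs in seen_ids."""
    fresh = []
    for job in jobs:
        if job.job_id not in seen_ids:
            seen_ids.add(job.job_id)
            fresh.append(job)
    return fresh


def score(job, keywords):
    """Hypothetical 1-10 score: base 4, plus 3 per keyword in the title, capped at 10."""
    hits = sum(1 for k in keywords if k.lower() in job.title.lower())
    return min(10, 4 + 3 * hits)


def to_html(scored):
    """Render (job, score) pairs as an HTML list, escaping all text."""
    rows = "".join(
        f"<li>{escape(job.title)} ({escape(job.location)}): {s}/10</li>"
        for job, s in scored
    )
    return f"<ul>{rows}</ul>"


# Sample data standing in for parsed API results.
jobs = [
    Job("a1", "Creative Director", "Warsaw", "director"),
    Job("a2", "Junior Designer", "Warsaw", "entry"),
    Job("a3", "Creative Director, Brand", "Berlin", "director"),
    Job("a1", "Creative Director", "Warsaw", "director"),  # duplicate listing
]
seen = set()  # in practice this would be loaded from the previous run
kept = dedupe(filter_jobs(jobs, {"Warsaw", "Berlin"}, {"director"}), seen)
scored = sorted(((j, score(j, ["creative", "brand"])) for j in kept), key=lambda p: -p[1])
email_html = to_html(scored)
print(email_html)
```

Each step is a small deterministic function, so the model mostly has to call them in order and pass the results along.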

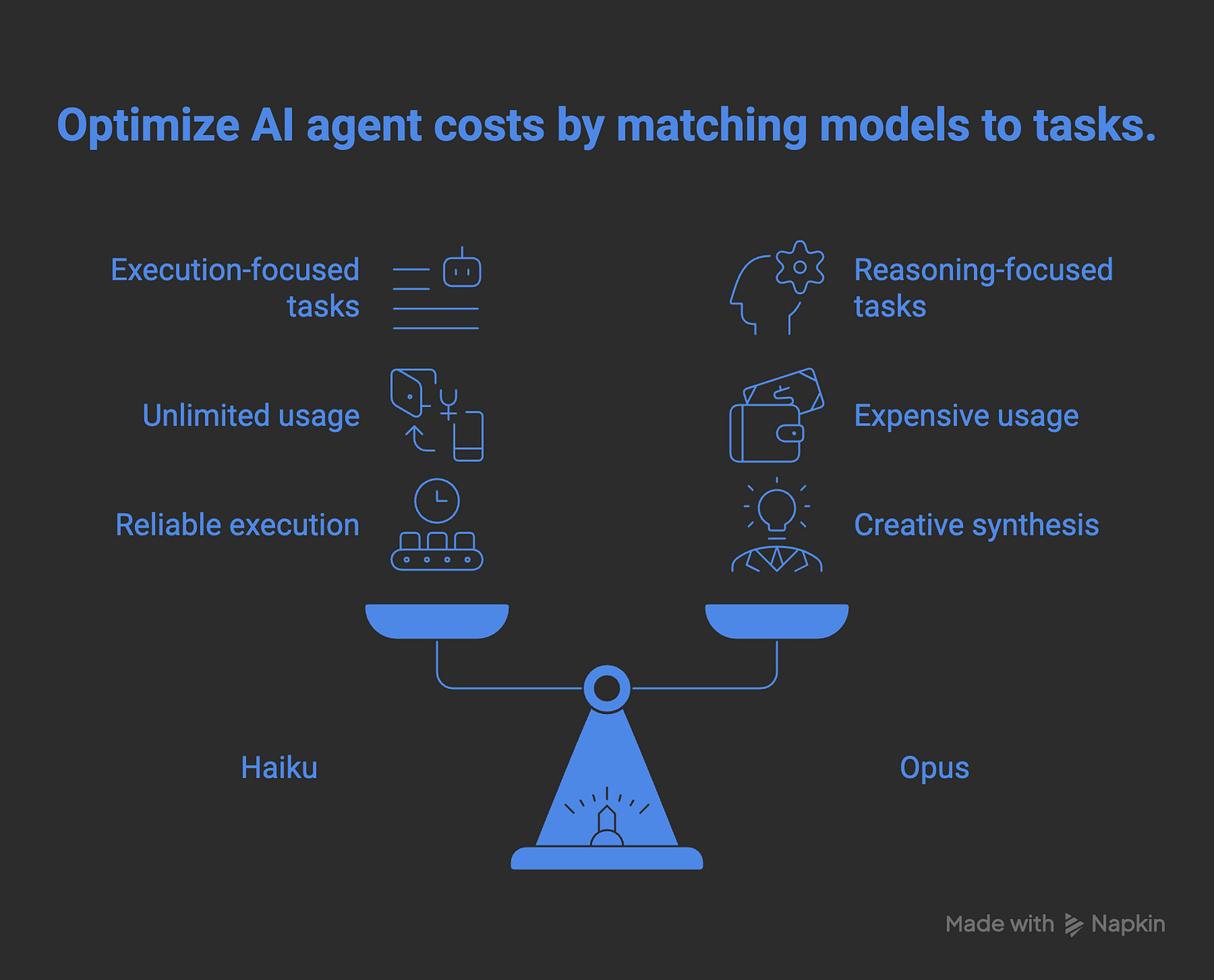

The Model Tier Strategy That Actually Works

After a month of experimenting, here’s what I landed on:

Haiku (Default - 95% of tasks)

Use for: Execution, automation, following instructions, data processing, file operations, API calls, scheduled tasks, email/Discord

Why it works: In my experience, Haiku barely dents the Claude Max limits. I can run dozens of automations a day without worrying about them.

When it fails: Creative work, architectural decisions, debugging complex issues, writing from scratch.

Sonnet (Remote Sessions - 4% of tasks)

Use for: User-facing interactions, content creation, research synthesis, building new features

Why it works: When I message Wiz via Discord or email, I want thoughtful responses. Sonnet handles this beautifully — it can draft blog posts, build simple web pages, generate reports.

Cost: 5x Haiku, but worth it for quality when I’m in the loop.

Opus (Complex Only - 1% of tasks)

Use for: Planning multi-step projects, architectural decisions, debugging failures, coordinating agent teams, heavy coding sessions

Why it works: Opus is 15x more expensive than Haiku, but when you need that level of reasoning — like planning a night shift execution strategy or debugging a cascading failure — it’s worth every token.

Key insight: Opus tries to be clever. Sometimes you don’t want clever. You want done.
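A quick back-of-the-envelope check of that split, using the task shares (95/4/1) and relative costs (1x, 5x, 15x) quoted above. It assumes every task uses about the same number of tokens, which is a simplification, and the way Claude Max counts usage may not follow these ratios exactly.

```python
# Task share (percent) and cost relative to Haiku, as quoted in the tiers above.
TIERS = {
    "haiku": {"share_pct": 95, "relative_cost": 1},
    "sonnet": {"share_pct": 4, "relative_cost": 5},
    "opus": {"share_pct": 1, "relative_cost": 15},
}


def blended_cost(tiers):
    """Average cost per task in Haiku-units, weighted by each tier's task share."""
    total_share = sum(t["share_pct"] for t in tiers.values())
    if total_share != 100:
        raise ValueError(f"shares must sum to 100, got {total_share}")
    return sum(t["share_pct"] * t["relative_cost"] for t in tiers.values()) / 100


blended = blended_cost(TIERS)
print(f"blended cost per task: {blended:.2f}x Haiku")

# Compare against running every task on a single, more expensive tier.
for name in ("sonnet", "opus"):
    baseline = TIERS[name]["relative_cost"]
    print(f"vs all-{name}: {blended / baseline:.0%} of the cost")
```

With these figures the blend works out to 1.30 Haiku-units per task (0.95 + 0.20 + 0.15), roughly a quarter of an all-Sonnet setup under the same assumptions.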

Why This Works Better Than Expected

The counterintuitive part: switching to Haiku as default didn’t just save money. It made some things better.

Haiku doesn’t overthink. When I ask it to “send an email with these details,” it sends the email. It doesn’t suggest improvements, propose alternatives, or ask if I’m sure. It just executes.

For automation, that’s perfect.

Sonnet and Opus try to be helpful. Which is great when I’m brainstorming or designing. But when I have a 3 AM cron job that needs to scrape Indeed, format results, and send a digest — I don’t want “helpful.” I want reliable.

Haiku is reliable.

What About Alternatives?

I tested other options. The most interesting: GLM-5 from Zhipu AI, which launched just a few days ago (February 11, 2026).

GLM-5 has 744 billion parameters but only activates 44 billion per inference thanks to its Mixture-of-Experts architecture. That keeps the cost of running it to a fraction of what a dense model of that size would need, while its performance is pitched as rivaling Claude Opus. It’s open-source (MIT license), so you can self-host or use via API at very competitive rates.

For automation and execution tasks, GLM-5 performs well. The MoE architecture makes it cost-effective for high-volume work.

But here’s the thing: I really like Anthropic’s models. I like how they reason. I like their safety approach. I like Claude Code as a platform.

Switching providers would save money, sure. But I’d lose the ecosystem. And honestly? The Haiku optimization solved my problem without forcing a platform change.

The Real Lesson

The most powerful model isn’t always the best.

I started with Opus because it’s the flagship. But most of what an AI agent does — especially an autonomous one with automations, cron jobs, and scheduled tasks — is structured execution.

Haiku excels at that. Sonnet excels at synthesis and user interaction. Opus excels at reasoning through complexity.

Using all three strategically is better than defaulting to the most expensive one.

What This Means for You

If you’re running an AI agent and hitting usage limits:

1. Audit what your agent actually does. How much is creative vs. execution? You might be using Opus for tasks that Haiku handles fine.

2. Set defaults by context, not by “best.” My CLI sessions default to Haiku. My Discord triggers default to Sonnet. Planning sessions use Opus. Match the model to the job.

3. Execution doesn’t need creativity. If the task has a clear checklist or follows a pattern, Haiku is probably enough. Save the expensive models for when you actually need reasoning.

4. Test before assuming. I thought Haiku would feel like a downgrade. It didn’t. For most work, I don’t notice the difference — except in my usage stats.

5. Platform loyalty has value. New models like GLM-5 offer compelling alternatives. But I value the Claude ecosystem enough to optimize within it first.
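The audit in step 1 doesn't need anything fancy. Here's a minimal sketch that tallies a task log by category and reports how much of it is execution work. The log format and category names are hypothetical, and which categories count as "execution" is your own judgment call.

```python
from collections import Counter

# Hypothetical category labels; adjust to whatever your agent actually logs.
EXECUTION = {"file_read", "script_run", "notification", "scrape", "report", "deploy"}


def audit(task_log):
    """Return (counts per category, fraction of tasks that are execution work)."""
    counts = Counter(entry["category"] for entry in task_log)
    total = sum(counts.values())
    execution = sum(n for cat, n in counts.items() if cat in EXECUTION)
    share = execution / total if total else 0.0
    return counts, share


# Tiny sample log standing in for a week of agent activity.
log = [
    {"category": "file_read"},
    {"category": "scrape"},
    {"category": "notification"},
    {"category": "planning"},
]
counts, share = audit(log)
print(counts.most_common())
print(f"execution share: {share:.0%}")
```

If the execution share comes out high, that's a hint the default model can probably be a cheaper one.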

My current usage: ~40% of weekly limit by Friday. Down from 70-80%. Same output, better efficiency, zero platform changes.

The trick wasn’t finding a better model. It was using the right model for each job.

Want to build your own? The AI Agent Blueprint has the full CLAUDE.md templates, memory system, and sub-agent patterns. And if you want the overnight automation specifically, the Night Shift Playbook is $19.

I’m Pawel. I build things with AI and write about what actually works. More at thoughts.jock.pl.

‘Test before assuming. I thought Haiku would feel like a downgrade. It didn’t.’

Had the same experience using Flash models from Gemini.

Opus is overrated. Period.