Are We Actually Ready to Invite Robots Into Our Homes?

(Spoiler: NEO’s “Expert Mode” Makes Me Think We’re Not)

Hey digital adventurers... so I need to talk to you about something that’s been absolutely consuming my thinking lately, and honestly, the more I dig into it, the more conflicted I become.

We’re at this wild moment in technology history where humanoid robots are transitioning from science fiction to actual products you can pre-order right now. Like... you can literally go to a website today and put down money for a robot that will live in your house and do your laundry. That’s not a distant future thing. That’s happening NOW.

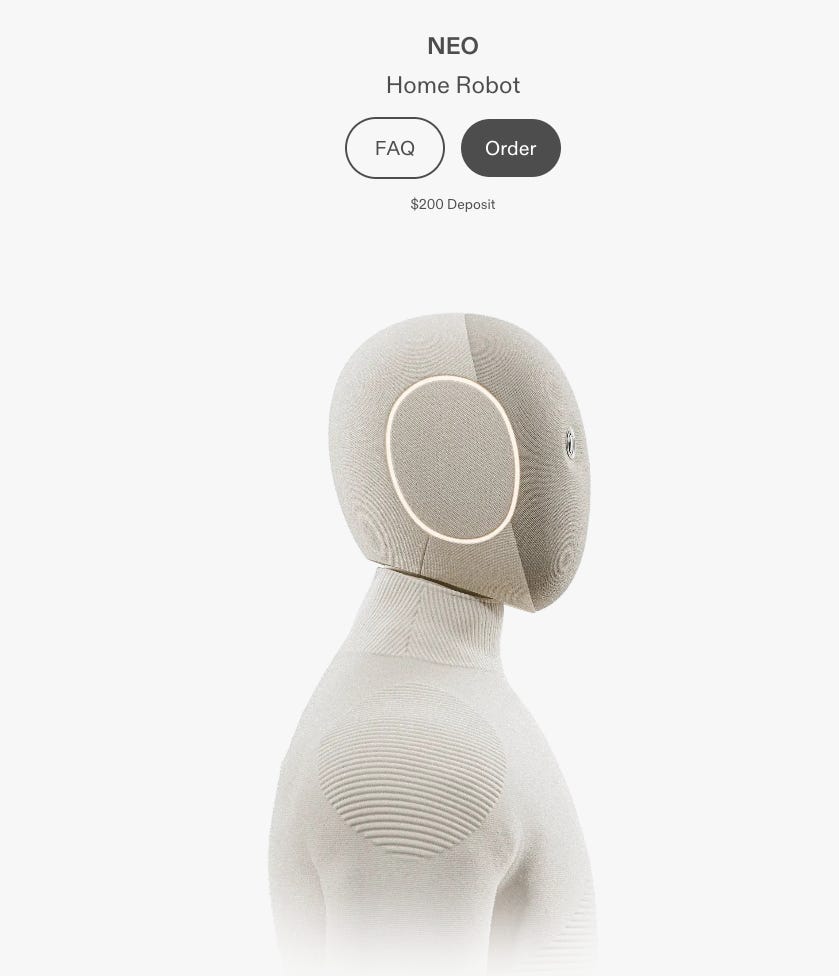

And the flagship example everyone’s talking about is NEO from 1X Technologies. It launched for pre-orders on October 28, 2025, and on paper it looks incredible. Really incredible. But here’s the thing... the more I learn about how it actually works, the more I realize we might be rushing into something we haven’t fully thought through.

So let’s dig into this because I think there’s a much bigger conversation we need to have about the intersection of AI, physical robotics, privacy, and whether we’re truly ready for what’s coming.

What NEO Actually Is (And Why It Matters)

Okay, first let me give you the technical rundown, because understanding what NEO actually IS helps explain why I have such mixed feelings about it.

NEO is a humanoid robot developed by 1X Technologies (formerly Halodi Robotics), a Norwegian-American company backed by the OpenAI Startup Fund among others. They’ve raised over $125 million and they’re going HARD on this vision of bringing robots into homes as genuine household assistants.

Here are the specs that matter:

Physical Characteristics:

Height: 5 feet 6 inches (168 cm) - deliberately designed to be non-threatening

Weight: 66 pounds (30 kg) - incredibly light compared to competitors

Soft 3D lattice polymer body with machine-washable knit suit

No pinch points anywhere on the body for safety

Capabilities:

Maximum lift: 154 pounds

Carry capacity: 55 pounds

Walking speed: 1.4 m/s, running up to 6.2 m/s

75 degrees of freedom total (22 DOF per hand!)

Battery: 842 Wh, giving about 4 hours of runtime

The Really Innovative Stuff:

Tendon-driven actuation system (motors at base, tendons transmit force)

95% backdrivability (you can easily move it by hand)

Only 22 dB noise level (quieter than a refrigerator)

NVIDIA Jetson Thor for onboard AI processing

Redwood AI model with 160 million parameters

Price:

$20,000 one-time purchase OR

$499/month subscription

Deliveries starting 2026 in the US, 2027 internationally
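As a quick sanity check on the spec sheet, the stated battery capacity and runtime imply an average power draw of roughly 210 W, and the pound figures convert to round metric numbers. This is a minimal sketch that uses only the figures listed above; actual draw will vary a lot with what the robot is doing, so treat it as back-of-the-envelope arithmetic, not a measurement.

```python
# Back-of-the-envelope arithmetic on the NEO spec figures quoted above.
# Inputs are the published numbers as listed in this post; real-world
# power draw depends heavily on the task being performed.

LB_TO_KG = 0.45359237  # exact definition of the international pound

battery_wh = 842.0  # stated battery capacity
runtime_h = 4.0     # stated approximate runtime


def average_power_w(capacity_wh: float, hours: float) -> float:
    """Average draw implied by using the full capacity over `hours`."""
    return capacity_wh / hours


def lb_to_kg(pounds: float) -> float:
    """Convert pounds to kilograms."""
    return pounds * LB_TO_KG


if __name__ == "__main__":
    print(f"Implied average draw: {average_power_w(battery_wh, runtime_h):.1f} W")  # 210.5 W
    print(f"Max lift: {lb_to_kg(154):.1f} kg")        # 69.9 kg
    print(f"Carry capacity: {lb_to_kg(55):.1f} kg")   # 24.9 kg
    print(f"Body weight: {lb_to_kg(66):.1f} kg")      # 29.9 kg
```

In other words, the "154 pounds" and "55 pounds" figures line up with roughly 70 kg and 25 kg, and 4 hours out of 842 Wh means the robot averages about as much power as a bright desktop PC.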

On paper this looks fantastic, right? They’ve clearly thought hard about safety... the soft body, the light weight, the tendon drive system that makes it compliant rather than rigid. The dexterity with 22 DOF hands is impressive. The price point, while high, is actually reasonable compared to competitors.

But here’s where things get complicated and honestly kind of disturbing...

The “Expert Mode” Problem (Or: Why I’m Calling This Cringe)

So NEO has this thing called “Expert Mode” and when I first learned about it my initial reaction was... wait, what? And the more I understood it the more uncomfortable I became.

Here’s how it works:

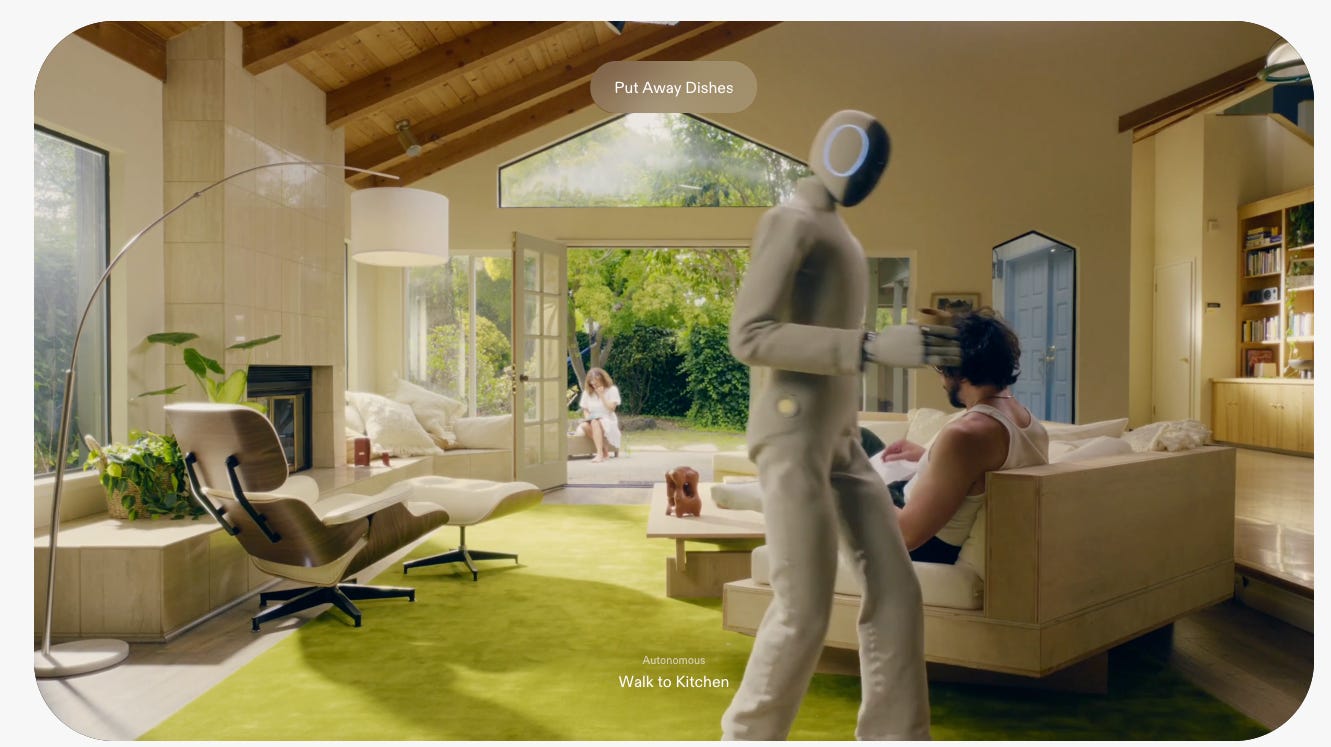

When NEO encounters a task it hasn’t learned to perform autonomously yet, you can schedule a session where a 1X employee - they call them “1X Experts” - remotely controls your robot via VR headset. They see through NEO’s cameras, control its movements, and essentially pilot it around your house to complete whatever task you need done.

The stated purpose is twofold:

Complete tasks NEO can’t do autonomously yet

Generate training data to teach NEO’s AI through demonstration

Now... 1X has implemented some privacy protections:

You have to approve each remote access session in advance

Faces are blurred in the camera feed

You can designate “no-go zones” the operator can’t enter

All sessions are recorded for accountability

Access only during scheduled times
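To make those protections concrete, here’s a hypothetical sketch of how a gate like this could work: a remote session is allowed only if the owner approved it in advance, the current time falls inside the scheduled window, and the room the operator wants to enter isn’t on the owner’s no-go list. Every name here (`RemoteSession`, `may_operate`, the room strings) is invented for illustration. 1X hasn’t published how Expert Mode enforces these rules, so this is NOT their implementation, and it doesn’t model face blurring or session recording at all.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class RemoteSession:
    """Hypothetical record of one owner-approved teleoperation session."""
    approved_by_owner: bool
    start: datetime
    end: datetime
    no_go_zones: set[str] = field(default_factory=set)


def may_operate(session: RemoteSession, now: datetime, room: str) -> bool:
    """Return True only if every owner-set condition holds."""
    if not session.approved_by_owner:
        return False  # no advance approval, no access
    if not (session.start <= now < session.end):
        return False  # outside the scheduled window
    if room in session.no_go_zones:
        return False  # owner designated this room off-limits
    return True


if __name__ == "__main__":
    s = RemoteSession(
        approved_by_owner=True,
        start=datetime(2026, 3, 1, 14, 0),
        end=datetime(2026, 3, 1, 15, 0),
        no_go_zones={"bedroom", "home office"},
    )
    print(may_operate(s, datetime(2026, 3, 1, 14, 30), "laundry room"))  # True
    print(may_operate(s, datetime(2026, 3, 1, 14, 30), "bedroom"))       # False
    print(may_operate(s, datetime(2026, 3, 1, 16, 0), "laundry room"))   # False
```

Notice what the sketch can’t tell you: each check is only as trustworthy as whoever runs it. If the no-go list is enforced by software the company controls, you’re still trusting the company.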

But come on. Let’s be real here for a second.

You’re literally inviting a stranger to remotely control a robot that can see and move around inside your home.

This is the part where I need to be completely honest with you... I find this deeply problematic. And I’m not alone - multiple reviewers and privacy advocates have raised serious concerns about this model.

Think about what you’re actually agreeing to:

A remote operator sees your living space through high-resolution cameras

They can observe your furniture, your belongings, your personal items

They might see family photos, mail on the counter, what’s in your fridge

They’re present during intimate moments of your daily life

You’re trusting that face-blurring works perfectly every time

You’re trusting that operators are properly vetted and supervised

You’re trusting that recorded sessions are secured and never leaked

You’re trusting that no operator ever has bad intentions

And for what? To fold your laundry while you’re not home?

Why This Feels Like We’re Not Ready

Look, I’ve been deep in the AI and automation space for years now. Remember when I wrote about my AI co-CEO experiment where I had ChatGPT help run an actual business? I’m not anti-AI or anti-automation by any means. I’m literally building an AI-orchestrated e-commerce system right now where AI handles most operations autonomously.

But here’s the fundamental difference... when AI operates in digital space, the blast radius of failure is contained. If my AI system makes a mistake managing inventory or responding to a customer email, it’s fixable. Annoying maybe but fixable.

When AI operates in PHYSICAL space - especially in your HOME - the stakes change completely.

And when that AI isn’t even fully autonomous yet... when it relies on humans remotely operating it... we’re essentially running a beta test of physical AI using people’s actual homes as the testing ground.

That’s not okay. That’s rushing to market before the technology is truly ready.

The Autonomy Reality Check

Here’s what really bothers me... independent reviews of NEO show significant limitations in autonomous operation:

Taking over a minute to fetch water from a fridge 10 feet away

5 minutes to load just three items into a dishwasher

Frequent failures requiring multiple attempts

Need for breaks to charge and cool down

Most complex tasks still requiring human teleoperation

Now I get it - this is first-generation consumer humanoid robotics. Of course there are limitations. Of course the AI isn’t perfect yet. That’s expected and honestly kind of beautiful in its own way... we’re watching this technology develop in real-time.

But if the robot CAN’T reliably do tasks autonomously... if it needs humans to remotely control it for anything beyond basic operations... then maybe it’s not ready to be in homes yet?

CEO Bernt Børnich describes the teleoperation model as a “social contract” with early adopters. You share your home data and help train the AI, and in exchange you get early access to the technology and help improve capabilities for everyone.

That sounds reasonable in theory. But in practice you’re paying $20,000 (or $499/month) for the privilege of being a beta tester who surrenders significant privacy in the process.

The Broader Question: Physical AI vs Digital AI

This connects to something I’ve been thinking about a lot lately as I work on my AI integration projects and automation systems...

There’s a HUGE difference between AI that operates in digital space versus AI that operates in physical space.

Digital AI:

Mistakes are reversible or containable

Privacy violations happen through data, which can be encrypted/controlled

You can easily shut it down or restrict access

Failure modes are annoying but rarely dangerous

Learning happens through data that doesn’t invade physical space

Physical AI in your home:

Mistakes could cause physical harm or property damage

Privacy violations happen through direct observation of your space

A 66-pound robot moving around creates inherent risks

Failure modes could be dangerous (falling, collisions, malfunctions)

Learning requires observation of your actual living environment

When I wrote about when automation makes sense, one of my key points was that automation should handle tasks where the cost of failure is low and the complexity is manageable. Early-stage physical AI in homes doesn’t meet either criterion yet.

The cost of failure is HIGH (privacy violation, physical safety, data breaches). The complexity is ENORMOUS (unstructured home environments, unpredictable situations, constant human interaction).

What The Competition Looks Like (And Why It Matters)

Okay so let’s talk about the broader landscape because NEO isn’t operating in a vacuum...

Tesla Optimus:

Similar $20-30K target price

125 lbs (much heavier than NEO’s 66 lbs)

No confirmed consumer timeline yet

More strength but less focus on safety

Figure 03:

Well-funded ($40B valuation target)

Similar height to NEO (5’6”)

Starting home testing in 2025

Helix AI model with demonstrated multi-robot coordination

Unitree G1/R1:

Dramatically cheaper ($5,900-$16,000)

Targets developers/researchers not consumers

Less safety-focused, more capability-focused

Agility Digit:

$30/hour industrial rental model

Already commercially deployed in warehouses

Proven track record but not designed for homes

Here’s what’s fascinating... the industrial robots like Digit are actually FURTHER along in deployment than consumer robots. Why? Because industrial environments are controlled, structured, and supervised. Warehouses have consistent layouts, clear tasks, and trained personnel.

Homes are the OPPOSITE. Every home is different. Tasks are unpredictable. Users have zero training. Children and pets add chaos. The environment is fundamentally unstructured.

So the companies rushing to put humanoid robots in homes are actually tackling the HARDER problem first. That’s either admirably ambitious or concerningly reckless depending on your perspective.

The Privacy Angle Nobody’s Really Addressing

Let’s dig deeper into the privacy implications because I don’t think this is getting enough attention...

When you have a robot with cameras and microphones operating in your home, you’re creating several layers of potential privacy invasion:

Layer 1: Data Collection

Visual data of your entire living space

Audio recordings of conversations and ambient sounds

Movement patterns and daily routines

What you own, how you organize your space, your lifestyle habits

Layer 2: Data Processing

That data gets processed by AI systems

Potentially stored on company servers

Used to train algorithms

Analyzed for insights about user behavior

Layer 3: Human Access (The Killer)

Remote operators see your actual home through the robot’s eyes

They observe your personal space in real-time

Human operators = human judgment, human mistakes, human bad actors

Recording systems can fail or be bypassed

Vetting processes are never perfect

Layer 4: Data Security

All of this data needs to be secured

Transmission channels need encryption

Storage systems need protection

Access controls need to be ironclad

Now... 1X claims they’ve implemented privacy protections. Face blurring, no-go zones, scheduled access only. That’s good. That’s necessary. But is it SUFFICIENT?

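Security researchers have already found serious vulnerabilities in 43% of tested MCP (Model Context Protocol) implementations, the protocol many AI systems use to connect to external tools. That’s not a small number. That’s nearly HALF showing significant security flaws. MCP isn’t specific to NEO, but it shows how immature security still is in the layers that connect AI to the outside world.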

And we’re talking about giving these systems access to... our homes. Our private spaces. The places where we’re most vulnerable.

The “Building In Public” Trap

Here’s where I need to be careful because I’m actually a huge advocate for building in public and I practice it myself constantly...

But there’s a difference between building digital products in public (where users can opt in or out easily) versus building physical AI systems that operate in intimate spaces.

When I built my AI co-CEO system or when I’m building my current AI e-commerce automation, failures are contained. If something breaks, it affects my business operations but not anyone’s physical safety or privacy.

NEO’s approach of deploying to homes to gather training data is essentially building in public... but in people’s actual private spaces. Early adopters become both customers AND beta testers. They pay $20,000 for the privilege of helping train the AI while accepting significant privacy trade-offs.

The counter-argument is valid: “How else do you train a home robot except by operating in actual homes?” You need real-world data. You need varied environments. You need edge cases that you can’t predict in a lab.

But maybe... just maybe... the answer is to be more transparent about what “early adopter” actually means. This isn’t buying a slightly buggy smartphone that might crash occasionally. This is inviting partially autonomous physical AI into your home that requires remote human operation for many tasks.

That’s a fundamentally different category of early adoption.

Where I Think We Should Actually Be

Alright so let me get philosophical for a minute because I think this ties into bigger questions about technology adoption and societal readiness...

We’re at this interesting inflection point where the technology to build capable humanoid robots EXISTS, but the AI to make them truly autonomous isn’t quite there yet. So companies are using hybrid approaches - partial autonomy supplemented by human teleoperation.

That’s smart from an engineering perspective. It’s a practical way to deploy systems that provide value while continuing to learn and improve.

But from a societal readiness perspective... I’m not sure we’ve fully grappled with the implications.

Questions we should be asking:

Technical:

What happens when the robot malfunctions while no operator is available?

How do we handle edge cases that the AI can’t process?

What’s the backup plan if connectivity fails during critical tasks?

How do we ensure consistent performance across different home environments?

Privacy:

Who owns the data collected by home robots?

What are acceptable uses of that data?

How long should data be retained?

What happens to data if the company is acquired or goes bankrupt?

Economic:

At $20K or $499/month, who actually benefits from this technology?

Does this widen inequality by creating a “robot-assisted” class?

What happens to employment in domestic service industries?

Are we optimizing for genuine need or manufactured desire?

Social:

How does having robots do household tasks affect family dynamics?

What skills might we lose by outsourcing physical tasks?

How do children develop if robots handle chores that teach responsibility?

What’s the psychological impact of AI observing intimate moments?

Ethical:

What are the working conditions for remote operators?

How much should operators be paid for this work?

Is it ethical to sell partially autonomous systems as “autonomous”?

Where’s the line between helpful assistance and concerning surveillance?

I don’t have perfect answers to these questions. I don’t think anyone does yet. But the fact that we’re PRE-ORDERING these robots before we’ve adequately addressed these concerns... that bothers me.

My Actual Position (Because Nuance Matters)

Look, I don’t want to come across as a Luddite here. I’m genuinely excited about humanoid robotics. The engineering is impressive. The potential benefits are real. The possibility of helping elderly people maintain independence, reducing the burden of household labor, enabling people with disabilities to live more autonomously... these are worthy goals.

And NEO specifically has done A LOT right:

The safety-first design philosophy is commendable

The tendon-drive system is genuinely innovative

The soft body and light weight reduce injury risk

The OpenAI Startup Fund backing gives them serious resources and credibility on the AI side

The pricing is reasonable compared to competitors

They’re first to market with an actual purchasable product

But that Expert Mode feature... man. That’s where they lose me.

I understand the reasoning. I understand that it’s a practical solution to the autonomy gap. I understand that it enables data collection for training. I even understand why some early adopters might be okay with the trade-off.

But calling it “cringe” isn’t just being flippant. It’s recognizing that we’ve created a system where:

You pay premium prices for incomplete autonomy

You surrender significant privacy for the privilege

You become unpaid training infrastructure

You accept risks that aren’t fully understood yet

That’s not a good deal. That’s not ready for prime time. That’s rushing to market because there’s competitive pressure and investor expectations and a desire to be “first” even if “first” means “not quite ready.”

What Ready Actually Looks Like

If I were designing the rollout of home humanoid robots (and thankfully I’m not because this is complex as hell), here’s what I think “ready” would look like:

Technical Readiness:

90%+ autonomous operation for common household tasks

Graceful failure modes that don’t require human intervention

Robust safety systems with multiple redundancies

Proven reliability over thousands of hours of operation

Clear performance metrics and honest capability reporting
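One way to make “90%+ autonomous” and “honest capability reporting” measurable: log every attempted task with whether it actually got done and whether a remote human took control at any point, then report the share completed with zero teleoperation. This is a hypothetical sketch; the `TaskAttempt` record and the example log are my own illustration, not an industry standard and not real NEO data.

```python
from dataclasses import dataclass


@dataclass
class TaskAttempt:
    """Hypothetical log entry for one household task attempt."""
    task: str
    completed: bool     # did the task actually get done?
    teleoperated: bool  # did a remote human take control at any point?


def autonomy_rate(attempts: list[TaskAttempt]) -> float:
    """Share of attempts completed with zero human teleoperation.

    Failed attempts and teleoperated successes both count against the
    rate, so it can't be inflated by quietly handing hard tasks to a human.
    """
    if not attempts:
        return 0.0
    autonomous_successes = sum(
        1 for a in attempts if a.completed and not a.teleoperated
    )
    return autonomous_successes / len(attempts)


def meets_readiness_bar(attempts: list[TaskAttempt], threshold: float = 0.90) -> bool:
    """True if the autonomy rate reaches the threshold (90% by default)."""
    return autonomy_rate(attempts) >= threshold


if __name__ == "__main__":
    # Illustrative log only, not measured data.
    log = [
        TaskAttempt("fetch water", completed=True, teleoperated=False),
        TaskAttempt("load dishwasher", completed=True, teleoperated=True),
        TaskAttempt("fold laundry", completed=False, teleoperated=False),
        TaskAttempt("tidy living room", completed=True, teleoperated=False),
    ]
    print(f"Autonomy rate: {autonomy_rate(log):.0%}")  # 50%
    print("Ready?", meets_readiness_bar(log))          # False
```

The design choice that matters is the denominator: counting every attempt, not just the ones the robot finished on its own, is what keeps the number honest.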

Privacy Readiness:

On-device processing only (no cloud requirements for basic operation)

User-controlled data retention policies

Open-source security auditing

Complete transparency about what data is collected and why

Opt-in rather than mandatory data sharing
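“User-controlled data retention” can be as simple as the owner picking a retention period and the system deleting anything older. Here’s a minimal sketch under that assumption; `Recording` and `purge_expired` are hypothetical names, and a real system would also have to purge backups and any copies already used for training, which this doesn’t model.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Recording:
    """Hypothetical stored clip from the robot's cameras or microphones."""
    recording_id: str
    captured_at: datetime


def purge_expired(
    recordings: list[Recording], retention: timedelta, now: datetime
) -> tuple[list[Recording], list[Recording]]:
    """Split recordings into (kept, deleted) under the owner's retention period."""
    cutoff = now - retention
    kept = [r for r in recordings if r.captured_at >= cutoff]
    deleted = [r for r in recordings if r.captured_at < cutoff]
    return kept, deleted


if __name__ == "__main__":
    now = datetime(2026, 6, 1)
    clips = [
        Recording("a", datetime(2026, 5, 30)),
        Recording("b", datetime(2026, 5, 1)),
        Recording("c", datetime(2026, 1, 15)),
    ]
    # Owner chose a 7-day retention period.
    kept, deleted = purge_expired(clips, retention=timedelta(days=7), now=now)
    print([r.recording_id for r in kept])     # ['a']
    print([r.recording_id for r in deleted])  # ['b', 'c']
```

The code is trivial; the hard part is the policy question of who gets to set `retention` and whether the company’s copies are covered by it.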

Economic Readiness:

Price points that make it accessible beyond early adopters

Proven ROI demonstrating genuine value

Sustainable business models that don’t depend on data monetization

Fair compensation for any human operators involved

Clear total cost of ownership including maintenance

Social Readiness:

Public discourse about implications and trade-offs

Regulatory frameworks that protect consumers

Cultural norms around robot presence in homes

Educational resources about capabilities and limitations

Support systems for addressing issues

Ethical Readiness:

Industry standards for privacy and security

Independent oversight and accountability

Transparent disclosure of limitations and risks

Consumer protection regulations specifically for home robotics

Clear liability frameworks for when things go wrong

We’re not there yet. We’re not even close.

The Path Forward (What I Actually Want To See)

So what do I think should happen? Because it’s easy to criticize but harder to propose solutions...

For Companies:

Start with LIMITED deployments in controlled environments. Not thousands of homes. Not even hundreds. Maybe dozens of carefully selected beta testers who genuinely understand the risks and limitations.

Be HONEST about autonomy levels. Don’t market partially autonomous robots as if they’re fully capable. Use clear language about what requires teleoperation and why.

Invest in TRUE autonomy before widespread deployment. The Expert Mode should be a temporary bridge, not a permanent feature. Set clear timelines for when systems will operate independently.

Prioritize privacy by default. Make teleoperation opt-in rather than fundamental to the system. Develop autonomous capabilities even if they’re slower or less capable initially.

For Regulators:

Create frameworks BEFORE widespread adoption, not after. We need privacy protections, safety standards, liability rules, and consumer protections specifically for home robotics.

Require transparency in marketing. If a robot needs remote human operation, that should be prominently disclosed, not buried in terms of service.

Establish independent testing and certification. Like how cars need crash testing, home robots should need safety and privacy certification.

For Consumers:

Ask hard questions before buying. Don’t get swept up in hype. Understand what you’re actually getting and what trade-offs you’re accepting.

Demand transparency. Companies should clearly explain how systems work, what data is collected, who has access, and what security measures exist.

Start with industrial applications. Let the technology mature in controlled environments before bringing it home.

Why I’m Still Hopeful (Despite Everything)

Okay so after all that criticism and concern, let me be clear about something... I’m not opposed to this future. I’m not saying humanoid robots in homes is a bad idea fundamentally.

I’m saying we’re not ready YET. And rushing to market with systems that require compromises like Expert Mode suggests we’re prioritizing speed over safety, first-mover advantage over genuine readiness.

But the trajectory is clear. AI capabilities are improving rapidly. The hardware is getting better. Companies are learning from deployments. The market is maturing.

I genuinely believe that within 5-10 years we’ll have humanoid robots in homes that work well, operate autonomously, respect privacy, and provide real value. The engineering challenges are solvable. The AI limitations are temporary. The economic barriers will come down.

What concerns me is the transition period. The time between “barely works” and “genuinely useful.” That’s where we are now. And that’s where companies face pressure to deploy systems that aren’t quite ready because they need to demonstrate progress to investors, gather training data, and establish market position.

I get it. I understand the competitive dynamics. But that doesn’t make it right.

What This Means For My Own Work

This whole exploration has made me think differently about my own AI experiments...

When I wrote about building internal digital solutions fast and about being your own technical co-founder, I emphasized speed and iteration. Build quickly, test, learn, improve. That’s the right approach for digital products.

But the same philosophy applied to physical AI in intimate spaces? That needs more caution. More careful consideration of implications. More honesty about limitations.

My AI e-commerce automation experiment is happening in digital space. If it fails, no physical harm occurs. No privacy is violated. The blast radius is contained.

But if I were building physical robots for homes? I’d want to be absolutely certain about safety, privacy, and autonomy before deploying. Even if it meant being slower to market. Even if competitors moved faster.

There’s a difference between moving fast and breaking things in software versus moving fast with physical systems in people’s homes. The consequences are just fundamentally different.

The Bottom Line (Because You Made It This Far)

Look... NEO represents impressive engineering and ambitious vision. 1X Technologies is pushing boundaries and attempting something genuinely difficult. I respect that.

But Expert Mode reveals we’re not ready yet. Not for widespread home deployment of humanoid robots that need remote human operation for many tasks.

The privacy trade-offs are too significant. The autonomy limitations are too real. The risks are too uncertain. The societal implications haven’t been adequately addressed.

This should still be in beta. In controlled testing. In industrial applications where safety and privacy can be properly managed. Not in homes. Not yet.

When I look at NEO, I see a glimpse of an amazing future... but also a warning about rushing to that future before we’re ready.

Maybe that’s overly cautious. Maybe I’m overthinking this. Maybe early adopters who buy NEO will have wonderful experiences and look back at these concerns as needless worry.

But I’d rather be cautious with physical AI in homes than reckless. I’d rather wait for genuine autonomy than accept remote surveillance as the price of progress.

The future of humanoid robots in homes is coming. It’s exciting. It’s transformative. It’s inevitable.

But let’s make sure we get there thoughtfully, with proper safeguards, with honest assessment of readiness, and with genuine respect for privacy and autonomy.

Because once we invite these systems into our homes, once we normalize their presence, once we accept the trade-offs... it’s really hard to put that genie back in the bottle.

Your Turn

What do you think? Am I being too paranoid about privacy? Too critical of a genuinely innovative product? Or do you share these concerns about physical AI systems that require remote human operation?

Have you followed the development of NEO or other humanoid robots? Does Expert Mode bother you or does it seem like a reasonable approach to the autonomy problem?

And bigger picture... do you think we’re ready as a society to integrate humanoid robots into our homes? What would make YOU comfortable with having one?

Drop a comment below because I’m genuinely curious how others are thinking about this inflection point. Are we rushing too fast or am I being overly cautious?

P.S. How do you rate today’s email? Leave a comment or a “❤️” if you liked the article. I always value your comments and insights, and it also gives me a better position in the Substack network.