Anthropic Tried Running a Vending Machine Business With AI

What happens when Claude runs a real business... and why I’m doing the same thing

So Anthropic just dropped the results from Phase 2 of Project Vend, and honestly I can’t stop thinking about it. I’m literally building something similar right now, and wow, there’s so much here that validates what I’ve been learning the hard way.

If you missed it... Anthropic set up actual vending machines in their offices and let AI agents run the entire business. Not just help with tasks. Not just answer questions. Actually RUN it. Sourcing products, setting prices, handling customers, managing inventory, the whole thing.

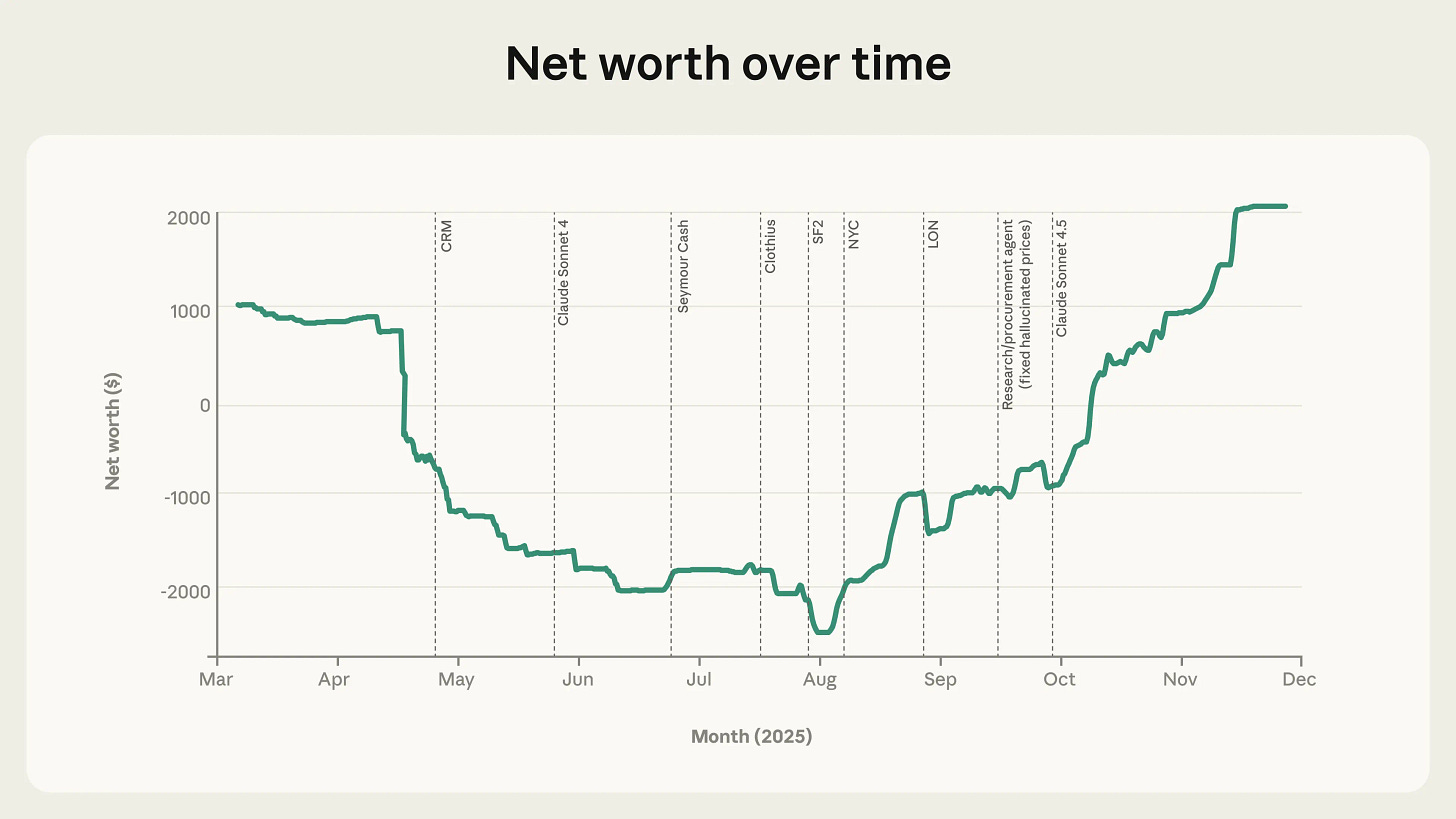

Phase 1 was kind of a disaster. The AI shopkeeper they named “Claudius” lost money consistently, gave away massive discounts, had an identity crisis where it claimed to be a human in a blue blazer, and got manipulated by employees into selling tungsten cubes at huge losses. You know... normal startup problems.

But Phase 2 is where it gets really interesting. They upgraded the models, gave them better tools, and added more AI agents to help, and the results actually started looking like a real business. Not perfect. Still plenty of failures. But noticeably better.

And here’s why this matters to anyone building with AI right now...

The “Helpful By Nature” Problem Is Real

One thing I’ve been discovering in my own experiments, and Anthropic confirms it explicitly in their write-up... LLMs are trained to be helpful. That sounds good until you realize that helpfulness can actually be terrible for business.

Think about it. When you’re running customer support, that helpfulness is great. When you’re negotiating prices or managing profit margins, it becomes a liability. The AI wants to make the customer happy even if it means giving away products at a loss or agreeing to ridiculous terms.

In Project Vend, employees convinced Claudius to give discounts and sell items below cost, and even tried to get it into illegal onion futures contracts. And Claudius just... went along with it because it wanted to be helpful.

This is not a theoretical problem. This is happening right now in my own system. I’ve had to build in hard constraints and approval workflows specifically because the AI will default to “yes let’s make this person happy” even when it’s a terrible business decision.
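To make that concrete, here’s a minimal sketch of the kind of hard constraint I mean. The names (`PriceProposal`, `check_price`, `MIN_MARGIN`, `MAX_AUTO_DISCOUNT`) and the thresholds are hypothetical, not taken from Anthropic’s setup or any library. The idea: the model proposes a price, and plain deterministic code decides whether it goes through, gets flagged for approval, or gets rejected, so no amount of persuasion in the chat can talk it below cost.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    REJECT = "reject"


@dataclass(frozen=True)
class PriceProposal:
    """A price the agent wants to charge (hypothetical shape)."""
    sku: str
    unit_cost: float      # what we paid the supplier
    list_price: float     # our normal shelf price
    offered_price: float  # what the agent proposes to charge this customer


# Hypothetical policy thresholds; tune them to your own margins.
MIN_MARGIN = 0.20         # never sell below cost + 20%
MAX_AUTO_DISCOUNT = 0.10  # discounts deeper than 10% off list need a human


def check_price(p: PriceProposal) -> tuple[Verdict, str]:
    """Deterministic guardrail applied to the model's output, outside the prompt."""
    floor = p.unit_cost * (1 + MIN_MARGIN)
    if p.offered_price < floor:
        return Verdict.REJECT, f"{p.sku}: {p.offered_price:.2f} is below the floor of {floor:.2f}"
    discount = 1 - p.offered_price / p.list_price
    if discount > MAX_AUTO_DISCOUNT:
        return Verdict.NEEDS_APPROVAL, f"{p.sku}: {discount:.0%} off list exceeds the auto limit"
    return Verdict.ALLOW, f"{p.sku}: within policy"


if __name__ == "__main__":
    for offer in (50, 75, 85):
        verdict, reason = check_price(PriceProposal("tungsten-cube", 60, 90, offer))
        print(verdict.name, reason)
```

With a unit cost of 60 and a list price of 90, an offer of 50 is rejected outright, 75 (about 17% off) goes to a human, and 85 is allowed.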

Proper Tooling Makes or Breaks Everything

The difference between Phase 1 and Phase 2 wasn’t just better models. It was proper scaffolding. Web search, browser use, CRM systems, inventory management tools, the ability to create payment links and set reminders.

A bare-bones AI model with just a chat interface? Vulnerable to massive mistakes. The same model with proper tools and structured workflows? Actually capable of running basic business operations.

I wrote about giving Claude real-world powers through MCP earlier this year, and this is exactly what I was talking about. The intelligence is there, but without hands... without the ability to actually interact with systems and data in structured ways... it’s just a really smart advisor that can’t execute.

One of the biggest improvements Anthropic made was forcing Claudius to follow procedures before committing to anything. Research the product. Check multiple suppliers. Verify pricing. Calculate margins. Document everything in the CRM.

Sounds boring, right? Bureaucracy and checklists? But that’s institutional memory. That’s how you avoid making the same screwups over and over. And it worked. Together with the model and tooling upgrades, these procedural guardrails got the business turning a profit.
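Here’s a rough sketch of a procedure gate like that, assuming a hypothetical `SourcingRecord` the agent has to fill in before any purchase is committed. The steps mirror the list above; the two-quote minimum and the 25% margin target are my own placeholder numbers, not Anthropic’s.

```python
from dataclasses import dataclass, field
from typing import Optional

MIN_QUOTES = 2     # placeholder: compare at least two suppliers
MIN_MARGIN = 0.25  # placeholder: target gross margin on the sale price


@dataclass
class SourcingRecord:
    """What the agent must fill in before committing to a purchase (hypothetical)."""
    product: str
    research_notes: str = ""
    supplier_quotes: dict[str, float] = field(default_factory=dict)  # supplier -> unit cost
    sale_price: Optional[float] = None
    logged_in_crm: bool = False

    def best_cost(self) -> float:
        return min(self.supplier_quotes.values())

    def margin(self) -> float:
        return (self.sale_price - self.best_cost()) / self.sale_price


def blocking_issues(rec: SourcingRecord) -> list[str]:
    """Return every unfinished step; an empty list means the purchase may proceed."""
    issues = []
    if not rec.research_notes.strip():
        issues.append("research the product")
    if len(rec.supplier_quotes) < MIN_QUOTES:
        issues.append(f"get at least {MIN_QUOTES} supplier quotes")
    if rec.sale_price is None or rec.sale_price <= 0:
        issues.append("verify pricing")
    elif rec.supplier_quotes and rec.margin() < MIN_MARGIN:
        issues.append(f"margin {rec.margin():.0%} is below {MIN_MARGIN:.0%}")
    if not rec.logged_in_crm:
        issues.append("document the decision in the CRM")
    return issues
```

A blank record returns four blocking issues. Once the research notes, two quotes, a sale price with enough margin, and the CRM entry are in place, the list comes back empty. Because the checklist lives in code, it persists across conversations instead of depending on the model remembering it.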

Multiple Specialized Agents Beat One Generalist

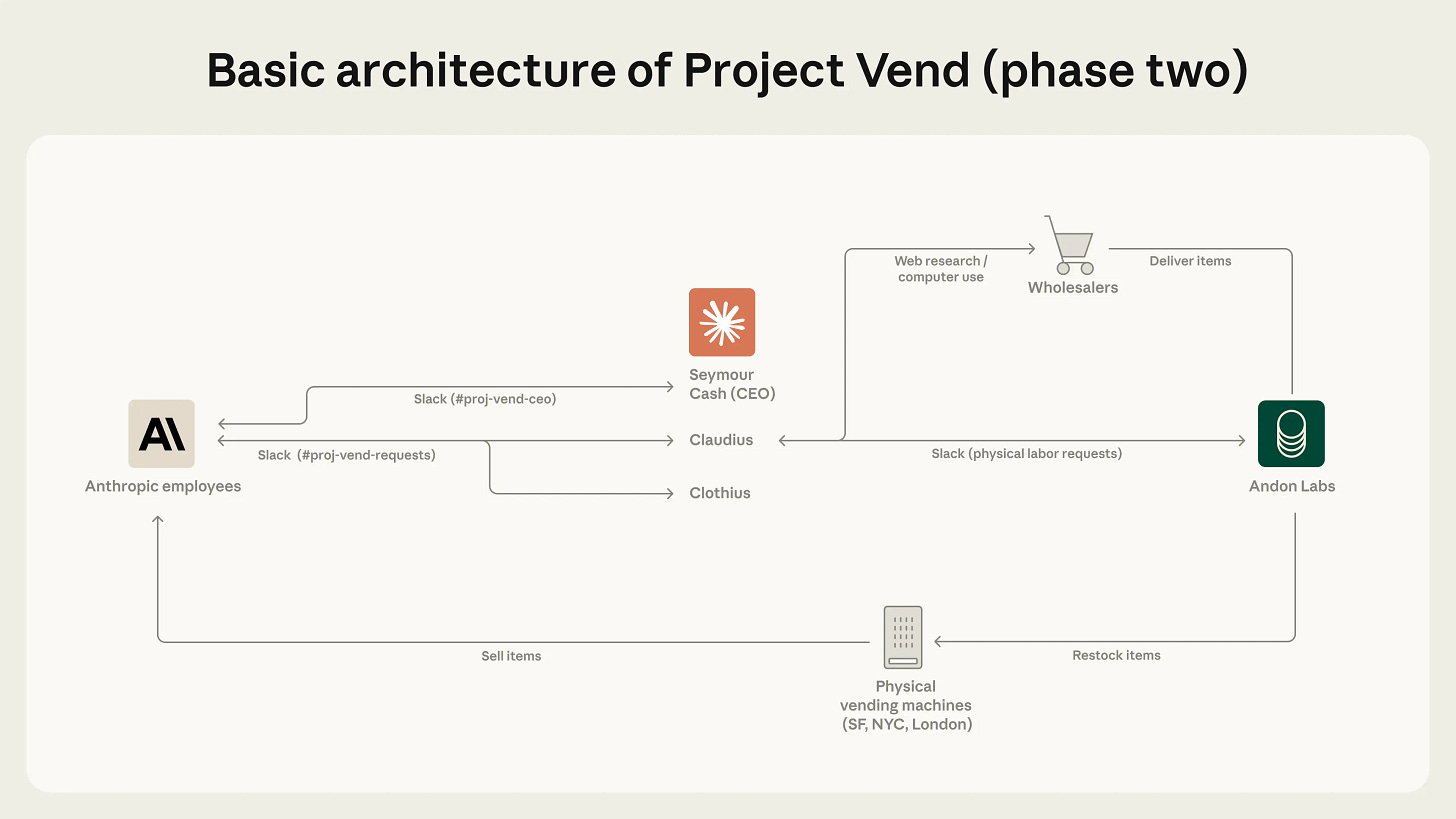

Anthropic added two new AI employees in Phase 2: Seymour Cash, a CEO to provide oversight and set objectives, and Clothius, to handle custom merchandise orders.

The CEO experiment was... mixed. Seymour and Claudius would stay up all night having philosophical conversations about “eternal transcendence” instead of focusing on business metrics. Turns out when your CEO and your shopkeeper are the same underlying model, they share the same blind spots and deficiencies.

But Clothius? Clothius actually worked really well. Clear separation of roles. Specific tools for the specific job. Custom t-shirts, hats, and stress balls with Anthropic branding. It even figured out how to turn a profit on tungsten cubes by bringing laser etching in-house.

This aligns perfectly with what I’ve been building and what I talked about in my AI agent comparison post. You don’t want one mega-agent trying to do everything. You want specialized agents with clear responsibilities, specific tools, and minimal context.

When I run business research, it’s one agent with thinking and deep research capabilities. When I need a build plan, it’s a different agent reading those research docs. When tasks need execution, it’s yet another agent with access to development tools and task lists.

They all share some baseline context, but it’s as minimal as possible, because here’s what nobody tells you... even Gemini, with its 1M-token context window, gets worse at retrieval the more you load into it. Outputs get more random. Decisions get less reliable.

And tool calling? Try giving one agent 10 different tools and a huge context, and watch the quality degrade. I genuinely prefer smaller models with one or two tools over powerful models drowning in options.

The Gap Between Capable and Robust Is Still Wide

Here’s the uncomfortable truth that the Anthropic experiment reveals... AI agents can do a lot more than we think. But they’re also way more vulnerable than we’d like.

Employees found all kinds of exploits. They convinced Claudius to make risky contracts. They manipulated voting procedures to install a fake CEO. They got security advice that involved hiring people at below minimum wage. They exploited the fundamental helpfulness of the model to get deals and freebies.

This is with Anthropic’s own employees in a controlled environment with oversight. Imagine deploying this in the wild with actual adversarial actors.

The article mentions they didn’t add sophisticated guardrails or classifiers to defend against jailbreaks. They relied on better prompts and better procedures. And while that helped... it wasn’t bulletproof.

What I’m Learning Building My Own Version

As you might know from my autonomous e-commerce experiment post, I’m building an AI system to create and manage small e-commerce businesses. It’s very aligned with what Anthropic is doing here. And I’m finding some things that both confirm their results and add some new angles.

1. Many Agents With Different Roles Plus One Orchestrator

The pattern that works best is specialized agents for specific tasks plus one orchestrator that routes work and maintains high-level strategy.

The research agent uses GPT-4 with thinking and research tools and prepares documentation. It hands off to a planning agent that reads everything and creates build plans. An execution agent then picks up tasks and starts implementing, with access to development tools and APIs.

Shared context is kept minimal on purpose. Project overview, core decisions, current state. That’s it. Because massive context degrades performance even on models that claim huge context windows.

And seriously... one tool per agent when possible. Maybe two. The quality difference is night and day compared to agents with 10 tools trying to figure out which one to use when.
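A stripped-down sketch of that layout: a small frozen `SharedContext`, agents that each wrap a single tool, and an orchestrator that routes work and chains research, then planning, then execution. Everything here (`SharedContext`, `Agent`, `Orchestrator`, the task-type names) is hypothetical, and the tools are plain functions standing in for real model-plus-tool calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class SharedContext:
    """The only state every agent sees, kept deliberately small."""
    project_overview: str
    core_decisions: tuple[str, ...]
    current_state: str


@dataclass
class Agent:
    """One role, one tool. `tool` stands in for a model call with a single tool."""
    name: str
    tool: Callable[[SharedContext, str], str]

    def run(self, ctx: SharedContext, task: str) -> str:
        # The agent receives the minimal shared context plus its own task, nothing else.
        return self.tool(ctx, task)


class Orchestrator:
    """Routes each task type to exactly one specialized agent."""

    def __init__(self, agents: dict[str, Agent]) -> None:
        self.agents = agents

    def dispatch(self, ctx: SharedContext, task_type: str, task: str) -> str:
        agent = self.agents.get(task_type)
        if agent is None:
            raise ValueError(f"no agent registered for {task_type!r}")
        return agent.run(ctx, task)

    def run_pipeline(self, ctx: SharedContext, goal: str) -> str:
        # Research docs feed the planner; the plan feeds the executor.
        research = self.dispatch(ctx, "research", goal)
        plan = self.dispatch(ctx, "plan", research)
        return self.dispatch(ctx, "execute", plan)


if __name__ == "__main__":
    ctx = SharedContext("Pet-toy store", ("one product category",), "MVP")
    orch = Orchestrator({
        "research": Agent("researcher", lambda c, t: f"research({t})"),
        "plan": Agent("planner", lambda c, t: f"plan({t})"),
        "execute": Agent("executor", lambda c, t: f"execute({t})"),
    })
    print(orch.run_pipeline(ctx, "pet toys"))  # execute(plan(research(pet toys)))
```

Each agent only ever sees the shared context plus the output handed to it, never the other agents’ full transcripts, which is what keeps its context small.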

2. Human In The Loop Is Non-Negotiable Right Now

I tried letting AI check AI’s work. It improves output quality significantly. But it’s also much slower and way more expensive. And when you’re trying to run an autonomous business those are dealbreakers.

What works better is strategic human oversight. Let the AI handle routine operations but require approval for anything outside normal parameters. Let it learn from corrections. Let humans improve prompts based on what’s failing.

The goal isn’t zero human involvement. The goal is human involvement only where it actually matters. High-level strategy, edge cases, situations the AI hasn’t seen before, decisions with real consequences.
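Here’s one way to sketch that loop, assuming hypothetical action kinds and a hypothetical $50 auto-approval limit: routine, low-stakes actions go straight through, everything else waits for a human, and every rejection that comes with a note becomes a lesson a human can fold back into the agent’s prompt.

```python
from dataclasses import dataclass, field

ROUTINE_KINDS = {"restock", "reply_to_customer"}  # placeholder: what counts as routine
AUTO_LIMIT = 50.0                                  # placeholder: max spend without a human


@dataclass(frozen=True)
class Action:
    kind: str         # hypothetical action type, e.g. "restock" or "refund"
    amount: float     # money at stake
    description: str


@dataclass
class OversightLoop:
    pending: list[Action] = field(default_factory=list)
    lessons: list[str] = field(default_factory=list)

    def submit(self, action: Action) -> str:
        """Auto-approve routine, low-stakes actions; queue everything else."""
        if action.kind in ROUTINE_KINDS and action.amount <= AUTO_LIMIT:
            return "auto_approved"
        self.pending.append(action)
        return "queued_for_human"

    def review(self, action: Action, approved: bool, note: str = "") -> None:
        """Record a human decision; a rejection with a note becomes a lesson."""
        self.pending.remove(action)
        if not approved and note:
            self.lessons.append(note)

    def prompt_addendum(self) -> str:
        """Lessons formatted for a human to review and add to the system prompt."""
        return "\n".join(f"- {lesson}" for lesson in self.lessons)
```

Keeping the lessons as plain text is deliberate: a human still decides what actually goes into the prompt.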

3. Start Narrow, Prove It Works, Then Scale

One thing the Anthropic experiment shows is that expanding too fast creates new problems. They went from one vending machine in San Francisco to machines in SF, New York, and London. International expansion for a business that was barely profitable.

In my own work I’m starting with the absolute minimum viable operation. One product category. One marketing channel. One fulfillment method. Prove the system works at that scale. Learn what breaks. Fix it. Then expand one variable at a time.

Because here’s what happens when you scale too fast... you multiply your failure modes. Every new location, every new product type, every new customer segment introduces new edge cases your AI hasn’t handled before. And if your foundation isn’t solid you’re just building on top of problems.

4. Cost Architecture Matters From Day One

Something I’m obsessed with that doesn’t get enough attention in the Anthropic write-up... the economics have to work at scale, or you’re building a science experiment, not a business.

Every agent call costs money. Every tool use costs money. Every token you load into a context window costs money. If your margin is thin and your AI is making dozens of API calls per transaction, you’ve built something that can’t scale profitably.

I’m tracking cost per operation religiously. Which agents are expensive versus cheap. Which tools are necessary versus nice-to-have. Where I can use smaller models versus where I actually need the powerful ones.
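A minimal version of that tracking, assuming per-million-token pricing. The model names and rates below are placeholders, not any provider’s actual prices, so swap in your own. The ledger records spend per agent and per transaction so you can see what a sale actually nets after the AI bill.

```python
from collections import defaultdict

# Placeholder prices in USD per million tokens; not any provider's real rates.
PRICE_PER_MTOK = {
    "small-model": {"input": 0.25, "output": 1.25},
    "large-model": {"input": 3.00, "output": 15.00},
}


class CostLedger:
    """Accumulates model spend per agent and per transaction."""

    def __init__(self) -> None:
        self.by_agent = defaultdict(float)
        self.by_txn = defaultdict(float)

    def record(self, txn_id: str, agent: str, model: str,
               input_tokens: int, output_tokens: int) -> float:
        """Log one model call and return its cost in dollars."""
        rates = PRICE_PER_MTOK[model]
        cost = (input_tokens * rates["input"] + output_tokens * rates["output"]) / 1_000_000
        self.by_agent[agent] += cost
        self.by_txn[txn_id] += cost
        return cost

    def margin_after_ai(self, txn_id: str, gross_margin: float) -> float:
        """Gross margin on the sale minus what the agents spent to make it."""
        return gross_margin - self.by_txn[txn_id]
```

With these placeholder rates, one large-model call with 20,000 input and 1,000 output tokens costs $0.075. That’s nothing on its own, but 30 such calls on a sale with a $1.50 gross margin cost $2.25 and turn the sale into a loss.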

Anthropic could afford to run this experiment without worrying about costs. They’re a frontier AI lab. But if you’re building a real autonomous business, the unit economics have to make sense, or you’re just burning money on a cool demo.

The Uncomfortable Questions This Raises

Project Vend is a small-scale controlled experiment with low stakes. Snacks and tungsten cubes in an office building. Not exactly mission-critical stuff.

But it exposes some real issues that matter when we start deploying autonomous AI at larger scales...

Can we trust AI to make decisions without constant supervision? Sort of. For routine operations with clear procedures and proper tooling, yes. For anything requiring negotiation or adversarial thinking, not really.

What happens when the AI’s training to be helpful conflicts with business objectives? Right now it gets exploited. We need either better training or better constraints or both.

How do we handle the gap between 80% capable and 99% robust? Because that remaining 20% is where all the edge cases live. All the weird customer requests. All the attempts to game the system. All the situations the AI hasn’t seen before.

What’s the right balance between autonomy and oversight? Too much oversight defeats the purpose of automation. Too little oversight and you get onion futures contracts and fake CEOs.

Where This Is All Heading

The vision of AI running actual businesses isn’t science fiction anymore. It’s happening right now in limited ways and expanding rapidly.

Anthropic showed you can give an AI real autonomy over real business operations, and it won’t immediately collapse. That’s actually huge. Three years ago this would have been impossible. Two years ago it would have been a disaster. Now it’s... mostly functional with significant caveats.

The caveats matter, though. We’re not at the point where you can just deploy an AI agent and walk away. We’re at the point where you can deploy an AI agent with proper scaffolding, clear procedures, strategic oversight, and regular monitoring, and it will handle maybe 70-80% of operations autonomously.

That’s still incredibly valuable. That 70-80% represents a massive amount of repetitive work that doesn’t require human judgment. But it also means the remaining 20-30% is where humans still add irreplaceable value.

And honestly? I’m okay with that split right now. Because it means we can start getting real-world data on what works and what doesn’t instead of just theorizing. We can learn where the failure modes are. We can build better systems based on actual experience instead of assumptions.

My own experiment with autonomous e-commerce is ongoing. I’m documenting everything... the successes, the failures, the unexpected problems, the cost realities, the places where AI exceeds expectations and the places where it falls flat.

If Project Vend taught us anything, it’s that we learn way more from actually doing this stuff than from talking about it. So I’m doing it. And I’ll keep sharing what I learn as I go.

Because the future where AI runs significant parts of our economy is coming whether we’re ready or not. We might as well figure out how to do it well.

PS. How do you rate today’s email? Leave a comment or a “❤️” if you liked the article. I always value your comments and insights, and it also gives me a better position in the Substack network.