When AI Writes Code, What Should Schools Teach?

Education is important, but old

So here’s a question that’s been rattling around in my head since I started using Claude Code daily: what exactly is the point of teaching someone to write a for-loop when an AI can generate one in half a second?

I’m not being flippant. This is a genuine question that computer science programs, bootcamps, and anyone teaching themselves to code need to wrestle with right now. Not in five years. Now.

Because here’s the uncomfortable truth - the AI coding tools available today aren’t just helping beginners write better code. They’re writing code that’s often better than what most beginners would produce after months of practice. That changes everything about how we think about technical education.

The Shift I Didn’t Expect

I’ve written before about my late-night coding adventures with AI tools. Building apps at 2 AM, shipping things in hours that would have taken days. And more recently, I did a deep dive into Claude Code specifically - testing it against other tools, seeing what it could actually do versus the hype.

What changed wasn’t just speed. It was my relationship with code itself.

I used to think of programming as a skill with clear levels. Beginner writes messy code. Intermediate writes cleaner code. Expert writes elegant, optimized code. You climb the ladder by writing more code, reading more code, failing more times.

That model feels broken now.

Because with Claude Code, I can describe what I want in plain English and get production-quality code. Not always - it messes up, it misunderstands, it occasionally hallucinates entire APIs that don’t exist. But increasingly, the bottleneck isn’t my ability to write the code. It’s my ability to know what code should exist in the first place.

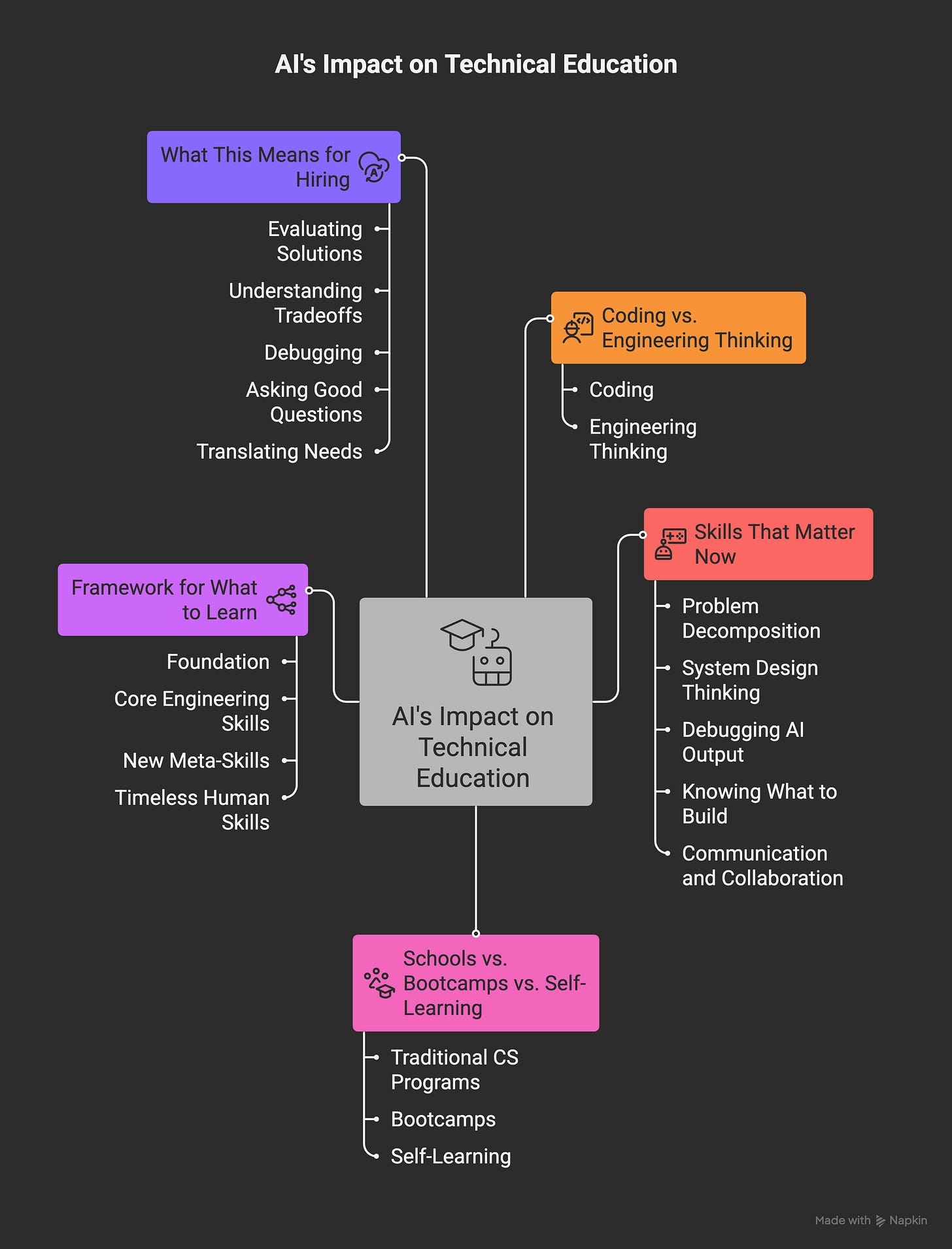

Coding vs. Engineering Thinking

Let me draw a distinction that I think matters more than ever.

CODING is syntax. It is knowing that Python uses colons and indentation while JavaScript uses curly braces. It is remembering whether arrays start at 0 or 1 in your language of choice. It is muscle memory for writing loops, conditionals, function definitions.

ENGINEERING THINKING is different. It is understanding why you would choose a database over a flat file. It is knowing when to split a monolith into microservices (and when that is a terrible idea). It is recognizing that the elegant solution requiring three API calls might be worse than the ugly solution requiring one.
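
To make that last tradeoff concrete, here is a rough back-of-the-envelope sketch in Python. The numbers (100 ms per call, a 99% chance that each call succeeds) and the function name sequential_cost are my own assumptions for illustration, not measurements from a real system.

```python
# Hypothetical numbers, chosen only to illustrate the tradeoff.
LATENCY_MS = 100     # assumed round-trip time per API call
SUCCESS_RATE = 0.99  # assumed chance that any single call succeeds


def sequential_cost(num_calls, latency_ms=LATENCY_MS, success_rate=SUCCESS_RATE):
    """Return (total latency in ms, chance that every call succeeds)
    for calls made one after another."""
    total_latency = num_calls * latency_ms
    all_succeed = success_rate ** num_calls
    return total_latency, all_succeed


elegant = sequential_cost(3)  # the "elegant" design with three calls
ugly = sequential_cost(1)     # the "ugly" design with one call

print(f"three calls: {elegant[0]} ms, {elegant[1]:.1%} chance all succeed")
print(f"one call:    {ugly[0]} ms, {ugly[1]:.1%} chance all succeed")
```

Under these assumptions the three-call design is three times slower and fails roughly three times as often. Whether that matters depends on the system, which is exactly the kind of judgment call I mean.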

AI is getting scary good at coding. It is still pretty bad at engineering thinking.

And here is the irony that keeps hitting me: to effectively use AI coding tools, you might need to understand code more deeply than ever before. Not to write it - but to evaluate it, debug it, know when it is wrong, know what questions to ask.

I have seen this firsthand. When I prompt Claude Code with vague instructions, I get vague results. When I prompt with architectural clarity - “I need a React component that does X, using Y pattern, because Z constraint” - the output is dramatically better. The quality of AI output directly correlates with the engineering sophistication of the input.

What Schools Currently Teach (And Why It Made Sense)

Traditional CS curricula make sense if you assume humans will write most code. You start with syntax. Then data structures. Then algorithms. Then design patterns. Each layer builds on the previous one.

The logic was: you need to understand how a linked list works before you can decide when to use one. You need to know Big O notation before you can optimize a slow function. You need to write bad code before you learn to write good code.
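
A small sketch of what that Big O point looks like in practice, assuming a task I picked for illustration (checking a list for duplicates). Both function names are hypothetical. The two versions return the same answers, but the first compares every pair while the second remembers what it has already seen.

```python
def has_duplicates_quadratic(items):
    """Compare every pair of items: O(n^2) comparisons in the worst case."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_linear(items):
    """Track seen values in a set: O(n) on average, for hashable items."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False


print(has_duplicates_quadratic([3, 1, 4, 1, 5]))  # True
print(has_duplicates_linear([3, 1, 4, 1, 5]))     # True
print(has_duplicates_linear([3, 1, 4]))           # False
```

An AI tool will happily write either version. Knowing why the second one scales better is the part that still takes understanding.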

And honestly? That logic still has merit. Understanding fundamentals matters. You cannot debug what you do not understand.

But here is my question: how much time should be spent on the fundamentals versus the higher-order skills that AI cannot (yet) replicate?

If a student spends their first two years mastering syntax and basic algorithms, and AI tools are handling most of that work in industry, are we preparing them for jobs that will exist when they graduate?

The Skills That Actually Matter Now

After months of using AI coding tools daily, here is what I think actually differentiates people who ship good software:

Problem decomposition

AI cannot tell you what problem to solve. It cannot look at a business and identify where technology would add value. It cannot break a vague “we need to improve customer experience” into specific, buildable features. This is the skill. Being able to take fuzzy human needs and translate them into concrete technical requirements. Schools barely teach this.

System design thinking

How should components talk to each other? Where does data live? What happens when this fails? What happens at scale? AI can generate individual components. It struggles with the big picture. When I ask Claude Code to build a feature, it builds the feature. It does not automatically consider how that feature interacts with authentication, caching, logging, monitoring. That is still my job.

Debugging AI output

This is a new skill that did not exist two years ago. AI-generated code often works. But when it does not, finding the bug requires understanding the code well enough to read it critically. Worse, AI sometimes generates code that appears to work but has subtle issues - security vulnerabilities, performance problems, edge cases that fail silently. You need enough coding knowledge to catch these.
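
Here is a minimal, made-up example of what I mean by an edge case that fails silently. It is not output from any particular tool, and the function names are hypothetical. The first version looks reasonable and passes the obvious test, but it quietly returns 0 for an empty list instead of signaling that there is nothing to average.

```python
def average_naive(values):
    """Plausible-looking code: correct for normal input, but an empty
    list silently produces 0.0 instead of an error."""
    total = 0
    for v in values:
        total += v
    # max(..., 1) avoids a ZeroDivisionError but hides the empty case.
    return total / max(len(values), 1)


def average_checked(values):
    """Same calculation, but the empty case is reported, not hidden."""
    if not values:
        raise ValueError("cannot average an empty sequence")
    return sum(values) / len(values)


print(average_naive([2, 4]))  # 3.0, looks fine
print(average_naive([]))      # 0.0, a made-up number that flows downstream

try:
    average_checked([])
except ValueError as err:
    print(f"caught: {err}")
```

Neither version is always right. Sometimes returning 0 is what the product needs. The point is that someone has to notice the choice was made, and that takes enough coding knowledge to read the function critically.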

Knowing what to build (and what not to)

The hardest part of software engineering was never writing code. It was deciding what code should exist. That has not changed. If anything, it is more important now that actually writing the code is cheap. I wrote about this in the context of career skills that AI cannot replace. The moat is not technical ability. It is judgment.

Communication and collaboration

Software is built by teams for humans. Understanding stakeholders, translating between technical and non-technical people, managing expectations - none of this is going away. If anything, as AI handles more implementation, the human skills around it become more valuable.

The Paradox: You Need to Know Code to Not Write Code

Here is what I keep coming back to.

The people who get the most out of AI coding tools are not the people who know nothing about code. They are people with enough understanding to: know what is possible and what is not, recognize when AI output is wrong or suboptimal, ask better questions that produce better outputs, debug and modify generated code when needed, and understand the architecture well enough to direct AI effectively.

It is like the difference between someone who cannot cook at all using a meal delivery service versus a chef using the same service. The chef might not cook every meal themselves, but they know when the delivery is good, they can modify it to their taste, and they understand what goes into making it.

So maybe the answer is not “stop teaching code.” Maybe it is “teach code differently.” Less time on syntax drills. More time on reading and evaluating code. More time on architecture and design. More time on the meta-skill of directing AI effectively.

Schools vs. Bootcamps vs. Self-Learning in the AI Era

Let me be practical about the different paths.

Traditional CS programs have time on their side. Four years is enough to teach fundamentals and build real depth. But they are also slow to change curriculum. If you are in a CS program right now, you are probably spending significant time on skills AI will handle by the time you graduate. My suggestion: supplement with real-world AI tool usage. Use Claude Code or Cursor for your projects. Learn what it is good at, what it is bad at. Build the meta-skill of AI collaboration while you are building fundamentals.

Bootcamps have always been about job readiness. They should probably be the fastest to adapt, but I am seeing mixed signals. Some are leaning into AI tools. Others are pretending they do not exist because their value proposition is “we will teach you to code.” The bootcamps that will survive are the ones that reframe their value: “we will teach you to build software” rather than “we will teach you to write code.” The distinction matters.

Self-learning is the wildcard. On one hand, AI tools make self-learning easier than ever. You can build real projects with AI assistance, learning as you go. On the other hand, without structure, it is easy to build a shallow understanding - you can ship things without really knowing why they work.

If you are self-learning, I would actually recommend this approach: build with AI, but then spend time reading the code it generates. Understand it. Ask the AI to explain it. Modify it. Break it and fix it. The meta-skill develops from this reflection, not just from shipping fast.

A Framework for What to Learn

Here is how I would think about it if I were starting from zero today:

FOUNDATION (still necessary, but compressed): Basic programming concepts (variables, loops, functions, data structures). Reading code fluently (more important than writing). One language well enough to understand AI output. Version control (git is not going away).

CORE ENGINEERING SKILLS (prioritize these): System design and architecture. Database concepts and data modeling. API design principles. Security fundamentals. Performance and scalability thinking.

NEW META-SKILLS: Prompt engineering for code generation. Evaluating and debugging AI output. Knowing when AI solutions are appropriate (and when they are not). Combining multiple AI tools effectively (I wrote about multi-model workflows and this applies to coding too).

TIMELESS HUMAN SKILLS: Problem decomposition. Communication with technical and non-technical stakeholders. Project management and estimation. Understanding user needs.

What This Means for Hiring

If I were hiring developers today - and this is speculative, I am just one person - I would care less about whether someone can write a sorting algorithm from memory. I would care more about: Can they evaluate solutions critically? Do they understand system tradeoffs? Can they debug something they did not write? Do they ask good questions? Can they translate business needs into technical direction?

The best developers I have worked with were never the ones who memorized the most syntax. They were the ones who understood problems deeply enough to know what solutions would work. AI does not change that - it actually makes it more visible.

The Uncomfortable Question

I will leave you with the question I keep asking myself.

If AI can already write code better than most bootcamp graduates, and it is getting better every month, what should someone learning to code today actually spend their time on?

I do not think the answer is “do not learn to code.” Code literacy will probably be like writing literacy - not everyone needs to be a novelist, but everyone benefits from being able to read and write.

But I also do not think the answer is “learn the same way we always have.” That feels like teaching penmanship in an era of keyboards. Valuable in some ways, but maybe not the best use of limited time.

The answer is somewhere in the middle. Less time on syntax, more on systems. Less time writing code, more time reading and evaluating it. Less focus on implementation, more on architecture and design. Less memorization, more judgment.

Schools will adapt eventually. They always do. The question is whether they will adapt fast enough for the people going through them right now.

And if you are one of those people - supplement your education with real AI tool usage. Build the meta-skill of collaboration with AI before you need it professionally. The students who do this will have a significant advantage over those who wait for the curriculum to catch up.

The tools have changed. What we teach should probably change too.

