Memory in AI Chats Is Giving Us a More... Personal Touch? At What Cost?

(is it bad, though?)

Hey digital adventurers... something fascinating has been happening in the AI space, and I need to talk about it because it’s fundamentally changing how we interact with these tools.

You know that moment when you open ChatGPT or Claude and it just... remembers things about you? Your preferences, your projects, that weird technical problem you were solving three weeks ago? That’s not just a cool feature. That’s a complete shift in what these tools actually ARE.

And honestly... it’s making us kind of attached to specific AI platforms in ways that feel almost personal.

Let me explain what I mean.

When ChatGPT Started Remembering (And Everything Changed)

Remember when ChatGPT first rolled out memory features? People started doing something really interesting... they’d ask ChatGPT questions about THEMSELVES.

“What do you know about my work?”

“What are my main projects right now?”

“Tell me about my preferences and habits”

It was almost like checking what an old friend remembers about you. And the responses? Often surprisingly accurate and detailed. ChatGPT had been quietly building a profile from all your conversations, noting patterns, preferences, work contexts, communication styles.

This wasn’t just novelty. This was people discovering that their AI assistant had become genuinely personalized in a way that felt... different. More intimate somehow.

And here’s where it gets really interesting from a behavior standpoint...

The Magnetic Effect of Context

Once an AI system knows enough about you, switching platforms becomes genuinely difficult. Not because of technical lock-in or subscription costs... but because you’d have to rebuild all that context somewhere else.

Think about what happens when you’ve been working with Claude for months on your e-commerce business. It knows:

Your business model and goals

Your technical skill level and gaps

Your communication preferences

Your recurring challenges

Your past solutions and what worked

Now imagine someone says, “Hey, try this other AI platform, it’s technically better.”

You probably think... but does it know about my Shopify integration challenges? Does it understand my automation workflow? Does it remember that debugging conversation from last Tuesday that finally solved that inventory sync issue?

The context accumulation creates gravity. The more conversations you have, the more the AI understands your specific situation, the harder it becomes to leave.

This is exactly what I’ve been experiencing with my AI-orchestrated e-commerce system [https://thoughts.jock.pl/p/ai-orchestrated-ecommerce-autonomous-business-experiment-2025]. The AI isn’t just executing commands... it’s building understanding of the entire business context over time.

From Tool to Partner (And Why That Matters)

Here’s what’s really happening under the surface...

Traditional software tools are mostly stateless. Every time you open Excel or Photoshop, you start fresh. Maybe you have saved files, but the tool itself doesn’t remember YOU.

AI with memory is fundamentally different. It’s more like working with a colleague who’s been on your team for months. They remember past discussions, understand your preferences, anticipate your needs.

When I wrote about the AI integration wars [https://thoughts.jock.pl/p/ai-integration-wars-claude-chatgpt-gemini-connected-intelligence-2025], I talked about how we’re moving from isolated chatbots to connected intelligence. Memory is a huge part of that shift.

But it goes deeper when you start adding MORE context through tools and integrations...

The Compounding Power of Context Layers

Memory alone is powerful. But when you combine memory with:

Access to your files and documents

Integration with your work tools

Understanding of your code repositories

Knowledge of your business data

Connection to your communication platforms

Now you’re building something that feels less like “using a tool” and more like “working with someone who genuinely understands your entire digital ecosystem.”

This is what I discovered when setting up MCP with Claude Desktop [https://thoughts.jock.pl/p/claude-desktop-mcp-setup-guide-real-world-integration-tools]. Once Claude could access my actual files, my GitHub repos, my project documentation... combined with the conversation memory it had built... suddenly it wasn’t just answering questions. It was actively understanding my work in context.

Same with my Dynamic Claude Chat experiments [https://thoughts.jock.pl/p/dynamic-claude-chat-automation-guide]. When the AI can pull from constantly updated knowledge sources AND remember our previous interactions... the quality of assistance goes way up.

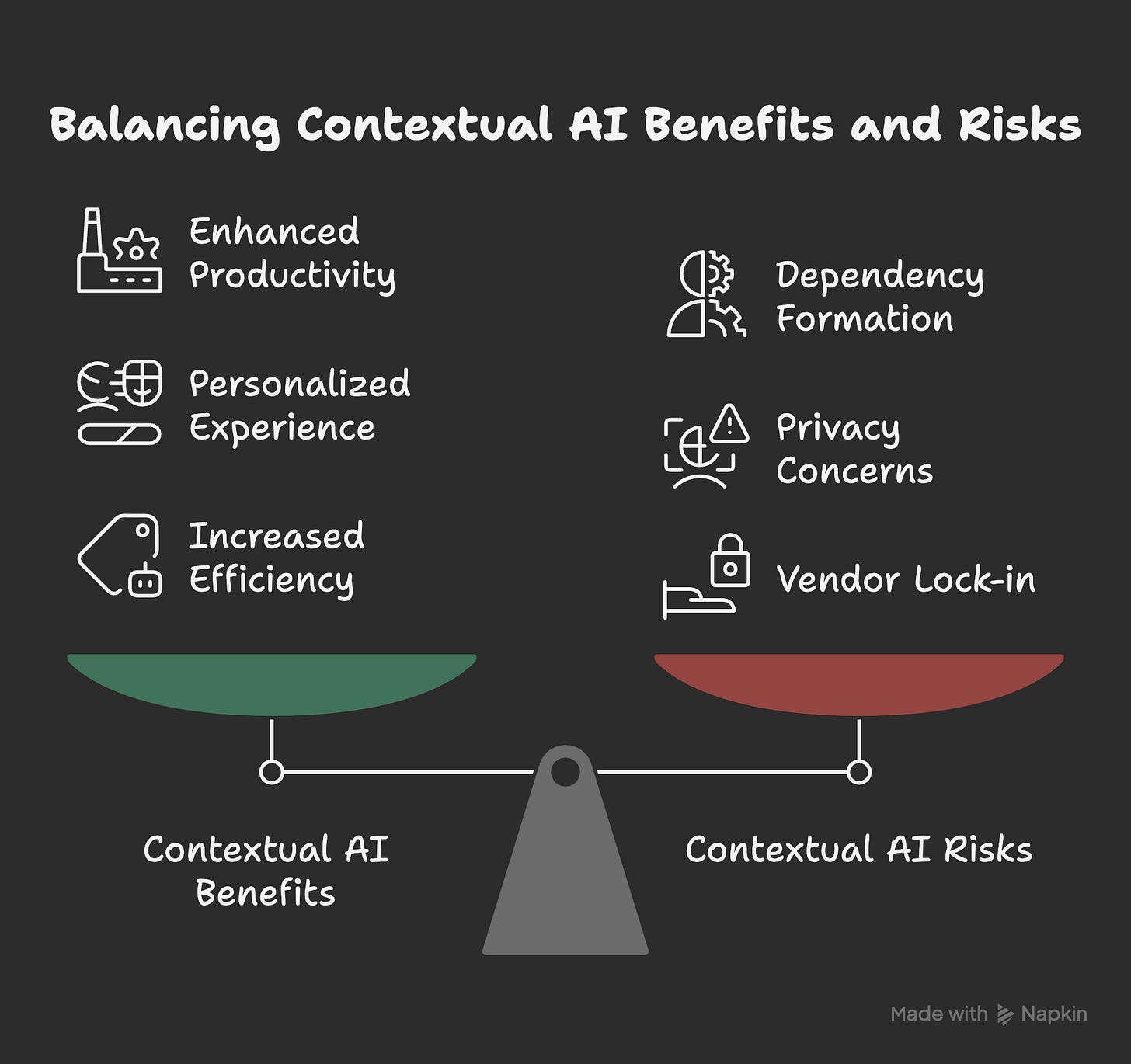

The Uncomfortable Questions Nobody’s Really Asking

But let’s be real for a second... this raises some genuinely tricky questions:

Privacy and Control:

What exactly is being remembered? Where is it stored? Can you actually delete it? The answer varies wildly by platform and most people don’t really know what data their AI assistant has accumulated about them.

Vendor Lock-in Through Behavioral Design:

Is this memory feature genuinely helpful... or is it primarily a retention mechanism? Both can be true, but we should be honest about the incentive structures here.

The Authenticity Question:

When an AI “remembers” you... what does that actually mean? It’s pattern matching and data retrieval, not genuine memory. But it FEELS personal. That gap between mechanism and experience is worth examining.

Dependency Formation:

Are we building helpful assistants or creating artificial dependencies? If you genuinely struggle to work effectively without your AI assistant because it knows so much context... is that empowerment or subtle addiction?

I don’t have clean answers to these questions. But they matter as we collectively figure out healthy relationships with increasingly personalized AI systems.

What This Means for How We Choose AI Tools

The memory effect is changing the calculation around which AI platform to use.

It used to be about:

Which has the best model performance?

Which has the features I need?

What’s the pricing?

Now it’s increasingly:

Which platform already knows my context?

Where have I built up conversational history?

What would I lose by switching?

This is why my hybrid approach with multiple AI agents [https://thoughts.jock.pl/p/ai-agent-comparison-claude-manus-zapier-real-testing-results-2025] is actually strategic. Different platforms for different contexts means you’re not creating a single point of dependency for ALL your contextual knowledge.

Manus for autonomous strategic reasoning. Claude for sophisticated content and analysis. Zapier for operational integrations. Each one builds its own contextual understanding of its specific domain.

Spreading your context across platforms has downsides (less integrated understanding) but also advantages (reduced lock-in, specialized expertise, redundancy if one platform changes).

The Future of Contextual AI Relationships

Where is this heading? A few predictions based on what I’m seeing...

Portable Context:

We’ll eventually need standards for exporting and importing your conversational context between platforms. Right now switching AI assistants means starting over. That won’t be sustainable long-term.

Selective Memory:

Better controls around what gets remembered versus forgotten. Some conversations should build long-term context. Others should be ephemeral. We need that granularity.
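To make that granularity concrete, here’s a toy sketch in Python. It is NOT how ChatGPT or Claude actually implement memory. The `MemoryStore` class, the `persistent` flag, and the JSON export format are all hypothetical, made up purely to illustrate two ideas: marking each memory as long-term or ephemeral, and exporting only the long-term part in a plain format another tool could read.

```python
import json
from dataclasses import dataclass, asdict, field

@dataclass
class MemoryItem:
    text: str
    persistent: bool  # hypothetical flag: True = keep across sessions, False = forget at session end

@dataclass
class MemoryStore:
    # Toy, hypothetical store; real assistants use their own (non-public) internals
    items: list[MemoryItem] = field(default_factory=list)

    def remember(self, text: str, persistent: bool = False) -> None:
        # New memories are ephemeral unless explicitly marked long-term
        self.items.append(MemoryItem(text, persistent))

    def end_session(self) -> None:
        # Drop everything that was marked ephemeral
        self.items = [m for m in self.items if m.persistent]

    def export_json(self) -> str:
        # Hypothetical "portable context" format: a plain JSON list of long-term memories
        return json.dumps([asdict(m) for m in self.items if m.persistent], indent=2)

store = MemoryStore()
store.remember("Runs a Shopify store; inventory sync is a recurring issue", persistent=True)
store.remember("Brainstorming gift ideas for a friend")  # ephemeral by default
store.end_session()
print(store.export_json())
```

After `end_session()`, only the Shopify note survives, so that’s the only thing the export contains. Defaulting to “ephemeral” is a deliberate choice in this sketch: you opt IN to long-term memory instead of having to remember to opt out.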

Shared Context Across Tools:

Imagine your calendar AI, your email AI, your coding AI, and your writing AI all sharing relevant context appropriately. That’s the direction we’re moving, though privacy and security challenges are enormous.

Context as Competitive Moat:

The AI platforms that figure out how to build the richest, most useful contextual understanding will have genuine advantages. It works a bit like a network effect, except the “network” is your own history rather than other users... the more you use it, the better it works, the harder it is to leave.

Building Your Context Strategically

If you’re using AI tools seriously for work (and honestly, who isn’t at this point), think strategically about context accumulation:

Be Intentional About Platform Choice:

Where you build conversational history matters. Choose platforms with clear data practices and export capabilities.

Document Your Context:

Don’t rely solely on AI memory. Maintain your own knowledge base and documentation. The AI’s memory should augment yours, not replace it.

Use Projects and Workspaces:

Features like Claude’s Projects let you organize context by domain. This keeps everything from bleeding together and makes context more portable.

Review What’s Remembered:

Periodically check what your AI assistant has accumulated about you. Most platforms offer ways to view and edit this, though admittedly it’s often buried in settings.

Build Redundancy:

Don’t put all your contextual eggs in one AI basket. Having multiple assistants with overlapping knowledge means you’re not completely stuck if one platform changes or fails.

The Honest Assessment

After months of working deeply with multiple AI platforms... memory and context accumulation is genuinely valuable. It makes these tools dramatically more useful for real work.

But it’s not purely positive. The stickiness is real. The dependency formation is real. The privacy implications are real.

The healthiest approach seems to be: embrace the benefits of contextual AI while maintaining awareness of what’s happening. Build rich context with platforms you trust, but don’t become so dependent that losing access would cripple your work.

Think of it like any relationship... intimacy and knowledge are valuable. But maintaining some independence and awareness is healthy too.

What’s Your Experience?

I’m genuinely curious about how you’re navigating this...

Are you finding yourself attached to specific AI platforms because of the context they’ve built? Have you tried asking your AI assistant what it knows about you? Have you successfully moved between platforms without losing critical context?

The conversation around AI is still mostly focused on capability and performance. But this behavioral dimension... how these tools shape our habits, preferences, and dependencies through memory... that might be just as important long-term.

Drop your thoughts in the comments. I’m especially interested if you’ve found good strategies for managing contextual AI relationships without getting locked into single platforms.

P.S. How would you rate today’s email? Leave a comment or a “❤️” if you liked the article. I always value your comments and insights, and it also gives me a better position in the Substack network.
