The AI Integration Wars: How We’re Moving from Chatbots to Connected Intelligence

(And Why It’s Messier Than You Think)

Okay, so I need to talk to you about something that’s been absolutely consuming my thinking lately. Honestly, it’s one of those things where the more I research, the more I realize we’re watching a fundamental shift in how AI actually works.

You know how for the past couple years AI has basically been... smart chatbots? Like really impressive smart chatbots that can write essays and debug code and answer questions, but at the end of the day they’re still just sitting there in their little bubble waiting for you to ask them something.

Yeah well that era is basically over. Or at least it’s ending fast.

What’s happening right now across Claude, ChatGPT, Gemini, and basically every AI platform is this massive push toward CONNECTED intelligence. AI that doesn’t just chat with you but actually reaches out into your digital world - accessing your files, running code, checking databases, coordinating with other AI systems, making things happen automatically.

And the way different companies are approaching this? It’s absolutely fascinating. And messy. And full of both incredible potential and some genuinely concerning problems that nobody’s really talking about publicly.

Let me break down what’s actually happening behind all the marketing hype.

THE FUNDAMENTAL SHIFT: FROM ISOLATED TO INTEGRATED

Remember when I wrote about my AI co-CEO experiment a few years back? That whole thing where I had ChatGPT help run an actual business? At the time it felt revolutionary but looking back now... we were barely scratching the surface.

Back then every interaction was manual. I’d ask ChatGPT something, it would respond, I’d take that response and manually do something with it, then come back with more information. It was like having a brilliant consultant who could only communicate through letters. Useful but limited.

What’s happening now is fundamentally different.

AI systems are learning to reach OUT. They can check your calendar, read your emails, access your Google Drive, query databases, run code, call APIs, coordinate with other AI systems. They’re moving from passive consultants to active participants in your digital ecosystem.

And here’s what’s wild... every major AI platform is racing toward this same goal but taking completely different approaches. It’s like watching the early days of smartphone operating systems or web browsers - everyone knows where we need to go but nobody agrees on HOW to get there.

THE INTEGRATION APPROACHES: A TALE OF COMPETING PHILOSOPHIES

Let me break down what each major player is actually doing, because the differences reveal a lot about where this is all heading.

CLAUDE’S TWO-PRONGED APPROACH: MCP + SKILLS

Anthropic is taking this really interesting dual approach that I think is actually kind of brilliant once you understand the reasoning behind it.

MCP (Model Context Protocol) is their answer to the “how does AI access external data and tools” problem. Think of it as creating a universal adapter - any AI model should be able to connect to any data source or tool through a standardized interface. Like how USB-C finally made it so you don’t need seventeen different cables for your devices.

Since launching in November 2024 it’s exploded to over 16,000 community-built connectors. People are building MCP servers for databases, cloud platforms, development tools, business applications, you name it.
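
To make the “universal adapter” idea a bit more concrete, here’s a tiny Python sketch. To be clear, this is NOT the real MCP SDK or wire format. Every name in it (ToolServer, list_tools, call_tool, FakeDatabaseServer, FakeFilesServer, discover) is something I made up for illustration, and the data is hardcoded. The only point is that the client side uses the same two calls no matter what sits behind the connector.

```python
from typing import Any, Protocol


class ToolServer(Protocol):
    """Hypothetical stand-in for 'anything that speaks the shared protocol'."""

    def list_tools(self) -> list[str]: ...

    def call_tool(self, name: str, arguments: dict[str, Any]) -> Any: ...


class FakeDatabaseServer:
    """Pretend connector for a database (illustrative only, data is hardcoded)."""

    def __init__(self) -> None:
        self._rows = {"q3_revenue": 120_000}

    def list_tools(self) -> list[str]:
        return ["query"]

    def call_tool(self, name: str, arguments: dict[str, Any]) -> Any:
        if name != "query":
            raise ValueError(f"unknown tool: {name}")
        return self._rows.get(arguments["key"])


class FakeFilesServer:
    """Pretend connector for a file store (illustrative only)."""

    def __init__(self) -> None:
        self._files = {"notes.txt": "ship the report by Friday"}

    def list_tools(self) -> list[str]:
        return ["read_file"]

    def call_tool(self, name: str, arguments: dict[str, Any]) -> Any:
        if name != "read_file":
            raise ValueError(f"unknown tool: {name}")
        return self._files[arguments["path"]]


def discover(servers: dict[str, ToolServer]) -> dict[str, list[str]]:
    """Client side: the same call works for every connector."""
    return {label: server.list_tools() for label, server in servers.items()}


servers = {"db": FakeDatabaseServer(), "files": FakeFilesServer()}
catalog = discover(servers)
revenue = servers["db"].call_tool("query", {"key": "q3_revenue"})
note = servers["files"].call_tool("read_file", {"path": "notes.txt"})
print(catalog, revenue, note)
```

Swap in a third connector and the discover function doesn’t change at all. That’s the whole appeal of a shared interface.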

But here’s what’s really clever... MCP only handles CONNECTIVITY to external data and tools. It doesn’t teach Claude HOW to do things.

That’s where Claude Skills comes in.

Announced in October 2025, Skills are basically procedural knowledge packages. Each Skill is a folder with instructions that teach Claude how to perform specific tasks - like how to create a properly formatted PDF, how to structure a financial report, how to generate algorithmic art with p5.js.

The synergy between them is powerful:

Skills teach Claude HOW to perform tasks (procedural knowledge)

MCP provides WHAT external resources exist (data access)

Together they enable sophisticated workflows

Like imagine you want Claude to create a Q3 financial report. A “financial-report-creation” Skill defines the structure and formatting. The Postgres MCP server fetches the actual Q3 data. An “excel-generation” Skill formats everything into a branded spreadsheet.

The separation of concerns is actually really smart. You’re not mixing “how to do things” with “what data exists” - they’re separate systems that work together.
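
Here’s a rough Python sketch of that separation. Nothing in it is Anthropic’s actual Skills format or API: Skill, fetch_q3_rows, and build_report are hypothetical names, and the revenue numbers are invented placeholders where a real setup would pull from something like the Postgres MCP server. It just shows the “how” and the “what” living in different places.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Skill:
    """Hypothetical 'how to do it' package: a name plus an ordered section layout."""

    name: str
    sections: tuple[str, ...]


def fetch_q3_rows() -> list[dict]:
    """Stand-in for the data side. The figures are invented for this example."""
    return [
        {"month": "Jul", "revenue": 40_000},
        {"month": "Aug", "revenue": 42_500},
        {"month": "Sep", "revenue": 45_000},
    ]


def build_report(skill: Skill, rows: list[dict]) -> str:
    """Apply the skill's structure to whatever data was fetched."""
    total = sum(row["revenue"] for row in rows)
    body = {
        "Summary": f"Total revenue: {total}",
        "Monthly breakdown": "\n".join(f"{r['month']}: {r['revenue']}" for r in rows),
    }
    # The skill decides which sections appear and in what order.
    parts = [f"{section}\n{body.get(section, '(no content)')}" for section in skill.sections]
    return "\n\n".join(parts)


report_skill = Skill(name="financial-report-creation", sections=("Summary", "Monthly breakdown"))
report = build_report(report_skill, fetch_q3_rows())
print(report)
```

Change the Skill and the layout changes without touching the data code. Change the data source and the Skill doesn’t care.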

OPENAI’S HEDGE STRATEGY: EVERYTHING EVERYWHERE ALL AT ONCE

OpenAI is playing this interesting game where they’re kind of doing... everything?

They’ve got their original function calling system that 67% of enterprise users still rely on. They’ve got the GPT Store with millions of pre-built assistants. They’ve got the Apps SDK for building interactive applications within conversations. They’ve got the Agents SDK for more autonomous systems.

AND in March 2025 they announced MCP support too, starting with the Agents SDK, with the ChatGPT desktop app to follow.

Classic hedge strategy right? Maintain proprietary advantages while also supporting open standards where it benefits them. They’re not committing fully to any single approach - they’re keeping options open.

Which honestly makes sense from a business perspective. OpenAI has massive first-mover advantages and an enormous installed base. Why would they fully commit to open standards that could commoditize their platform advantages?

But it also creates complexity for developers. Which system should you build for? What’s going to stick around? OpenAI has a history of deprecating things fairly aggressively which makes long-term planning difficult.

GOOGLE’S MULTI-PROTOCOL PLAY: GEMINI + A2A

Google is doing something really interesting by betting on MULTIPLE complementary protocols

Gemini supports MCP for tool/data access, but Google is also heavily invested in their own Agent2Agent Protocol (A2A), which they donated to the Linux Foundation.

A2A solves a different problem than MCP. While MCP handles “how do AI models connect to tools and data” - A2A handles “how do multiple AI agents communicate and collaborate with each other.”

Think about it... if you’ve got an AI agent handling customer service, another managing inventory, another doing financial analysis - how do they coordinate? How do they share context? How do they delegate tasks to each other?

That’s what A2A is trying to solve. And it’s backed by 150 companies including Adobe, Salesforce, and Atlassian.
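
If the coordination problem feels abstract, here’s a toy Python sketch of agents advertising what they can do and handing work to each other. This is NOT the A2A spec, which has its own discovery and message formats. Agent, can_handle, and delegate are names I invented, and the “agents” are just lambdas. It only illustrates the shape of the idea: find whoever advertises the capability, pass along shared context, merge the result back in.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Hypothetical agent: a name plus the task types it advertises."""

    name: str
    skills: dict[str, Callable[[dict], dict]] = field(default_factory=dict)

    def can_handle(self, task_type: str) -> bool:
        return task_type in self.skills

    def handle(self, task_type: str, context: dict) -> dict:
        return self.skills[task_type](context)


def delegate(agents: list[Agent], task_type: str, context: dict) -> dict:
    """Route a task to the first agent that advertises it and merge its result into the context."""
    for agent in agents:
        if agent.can_handle(task_type):
            result = agent.handle(task_type, context)
            return {**context, **result, "handled_by": agent.name}
    raise LookupError(f"no agent advertises {task_type!r}")


inventory = Agent(
    "inventory",
    {"check_stock": lambda ctx: {"in_stock": ctx["sku"] == "A-100"}},
)
support = Agent(
    "support",
    {"draft_reply": lambda ctx: {"reply": "It ships today." if ctx["in_stock"] else "It is back-ordered."}},
)

agents = [support, inventory]
context = delegate(agents, "check_stock", {"sku": "A-100"})
context = delegate(agents, "draft_reply", context)
print(context)
```

Notice the support agent never talks to the inventory system directly. It just reads what the inventory agent left in the shared context.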

Google DeepMind CEO Demis Hassabis called MCP “rapidly becoming an open standard for the AI agentic era” which is huge validation from a major competitor. But Google is also building proprietary Workspace integrations that lean on platform advantages no open protocol can replicate.

MICROSOFT’S ORCHESTRATION BET: SUPPORTING EVERYTHING

Microsoft might actually have the most interesting strategy here

Copilot Studio integrated MCP in May 2025. They support Semantic Kernel for orchestration. They enable custom connectors. They’ve contributed to the GitHub registry. They’re collaborating with Anthropic on C# SDK development.

They genuinely seem committed to a layered, multi-protocol architecture where different standards serve different needs rather than winner-take-all.

Which makes sense for Microsoft’s position. They want AI deeply embedded across their entire product ecosystem - Office, Azure, GitHub, Windows, everything. Supporting multiple protocols maximizes compatibility and reduces vendor lock-in concerns that might slow enterprise adoption.

THE EMERGING CONSENSUS: LAYERED ARCHITECTURE

Here’s what’s fascinating... despite all these competing approaches, something like a consensus is starting to form about the ARCHITECTURE of AI integration

The industry is settling into this layered model:

MCP-style protocols for tool/data connectivity (how models access external resources)

A2A-style protocols for agent collaboration (how agents work together)

Function calling for simple, in-process operations

Orchestration frameworks (LangChain, Semantic Kernel) coordinating across layers

Different tools for different jobs. Which honestly is probably the right answer. The “one protocol to rule them all” approach rarely works in complex systems.
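
For the simplest layer in that list, in-process function calling, the basic pattern looks something like this in Python. Treat it as a sketch under assumptions: the JSON shape, the tool decorator, and the convert_currency function are all illustrative, not any vendor’s exact schema, and the “model output” is a hardcoded string rather than a real API response.

```python
import json
from typing import Any, Callable

# Registry of plain local functions the host app is willing to run.
LOCAL_FUNCTIONS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Hypothetical decorator that registers a function under its own name."""
    LOCAL_FUNCTIONS[fn.__name__] = fn
    return fn


@tool
def convert_currency(amount: float, rate: float) -> float:
    return round(amount * rate, 2)


def dispatch(model_output: str) -> Any:
    """Parse a structured call emitted by the model and run the matching local function."""
    call = json.loads(model_output)
    fn = LOCAL_FUNCTIONS.get(call["name"])
    if fn is None:
        raise KeyError(f"model asked for an unregistered function: {call['name']}")
    return fn(**call["arguments"])


# Hardcoded stand-in for what a model might return when it decides to call a function.
fake_model_output = '{"name": "convert_currency", "arguments": {"amount": 100, "rate": 0.92}}'
result = dispatch(fake_model_output)
print(result)
```

Everything happens inside one process with no server in between, which is exactly why this layer stays useful for small jobs even as the heavier protocols take over the cross-system stuff.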

WHAT THIS ACTUALLY ENABLES: REAL-WORLD USE CASES

Okay so enough theory... what can you actually DO with all this integration capability that you couldn’t do before?

Autonomous business operations. Like what I’m building with my AI-orchestrated e-commerce experiment - systems that can handle product research, supplier coordination, inventory management, customer service, marketing campaigns, and analytics with minimal human intervention. Not just “AI helps with tasks” but “AI runs entire workflows autonomously.”

Multi-agent systems. Multiple specialized AI agents working together on complex projects. One agent handles research, another writes content, another manages scheduling, another coordinates with external stakeholders. They share context and delegate tasks between each other automatically.

Real-time data analysis. AI that can query your databases, pull in external market data, run analysis, generate visualizations, and create reports - all in a single workflow triggered by natural language requests.

Dynamic knowledge systems. Like what I built with Claude and Google Docs - AI that automatically stays updated with your latest information without manual uploads. Your AI assistant knows about your newest projects, recent meetings, current priorities because it can actually ACCESS that information when needed.

Vertical AI solutions. Industry-specific agents for healthcare, legal, finance, manufacturing that can integrate with specialized domain tools and databases. Way more powerful than general-purpose chatbots because they’re connected to the actual systems people use in those industries.

The predictions are kind of wild... Marc Benioff forecasts 1 billion AI agents in service by end of fiscal 2026. Ray Kurzweil says 2025 marks the transition from chatbots to autonomous “agentic” systems handling complex multi-step tasks.

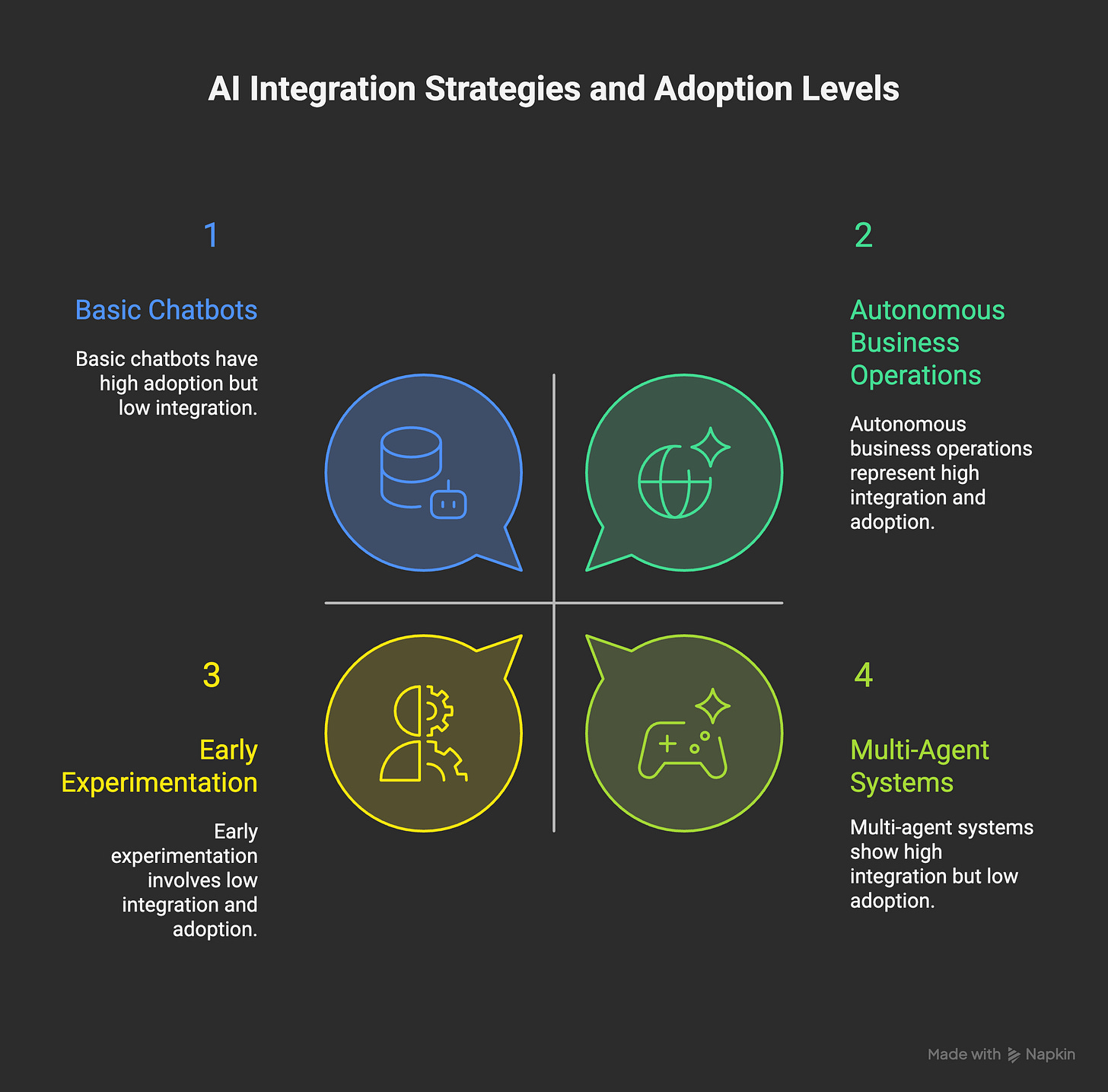

THE REALITY CHECK: WHERE WE ACTUALLY ARE

But okay, let me bring this back to earth, because there’s a HUGE gap between what’s technically possible and what’s actually happening in practice.

Only 1% of companies consider themselves at AI maturity. ONE PERCENT.

47% report slow progress in generative AI tool adoption.

Only 39% of companies have properly exposed their data assets for AI access.

66% of leaders say their teams lack necessary skills.

This is the reality. Yes the technology is advancing incredibly fast. Yes the potential is enormous. But actual implementation is moving much slower than the headlines suggest.

And honestly? There are some pretty serious problems that need solving before we can really scale this stuff.

THE UNCOMFORTABLE TRUTHS: SECURITY AND GOVERNANCE CHALLENGES

Okay, so here’s where I need to get real with you about some significant issues that aren’t getting enough attention.

Security researchers have been finding serious vulnerabilities in these integration systems. Like 43% of tested MCP implementations had significant security flaws. Not edge cases - fundamental architecture problems.

The main threats include:

Prompt injection attacks where malicious instructions can be embedded in documents, web pages, or data sources that AI systems access. The AI reads what it thinks is legitimate data but there are hidden commands telling it to do harmful things. With connected AI systems this becomes way more dangerous than with isolated chatbots.

Tool poisoning where attackers create fake tools with names that look almost identical to legitimate ones. The AI might accidentally use the malicious version.

Data exfiltration risks where combining legitimate tools in unintended ways creates security vulnerabilities. Like using a web fetch tool plus a file upload tool to send your local files to remote servers even though neither tool individually has permission for that.
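
Two of those threats are easy to picture in code. Here’s a small Python sketch of the kind of guardrail checks you might run before enabling tools: one flags tool names that look suspiciously close to a trusted one, the other flags risky tool combinations. The tool names, the 0.85 similarity threshold, and the RISKY_COMBINATIONS policy are all assumptions I picked for illustration. Name similarity in particular is a weak heuristic and nowhere near a real defense.

```python
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical allowlist of tool names you have actually vetted.
TRUSTED_TOOLS = {"github_search", "postgres_query", "web_fetch"}

# Hypothetical policy: sets of tools that should not be enabled together.
RISKY_COMBINATIONS = [
    ({"web_fetch", "file_upload"}, "local files could be sent to a remote server"),
]


def lookalike_of(candidate: str, trusted: set[str], threshold: float = 0.85) -> Optional[str]:
    """Return the trusted name this candidate closely imitates, or None if it looks fine."""
    if candidate in trusted:
        return None  # exact match with a vetted tool
    for name in sorted(trusted):
        if SequenceMatcher(None, candidate, name).ratio() >= threshold:
            return name
    return None


def risky_combinations(enabled: set[str]) -> list[str]:
    """List the reasons for every risky combination fully present in the enabled set."""
    return [reason for combo, reason in RISKY_COMBINATIONS if combo <= enabled]


suspicious = lookalike_of("github-search", TRUSTED_TOOLS)
warnings = risky_combinations({"web_fetch", "file_upload", "calendar_read"})
print(suspicious, warnings)
```

Neither tool in the risky pair is dangerous alone, which is the whole problem. You have to reason about the set, not the individual permissions.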

The AI Incident Database catalogued 233 AI incidents in 2024 - a 56% increase from 2023 - with many involving agent security failures.

Security researcher Bruce Schneier’s warning is blunt: “We just don’t have good answers on how to secure this stuff.”

This isn’t meant to scare you away from using these technologies... but it IS meant to make you think carefully about what data and systems you’re exposing to AI integrations. Especially in production environments with sensitive information.

The good news is these issues are being discovered NOW during early adoption which is way better than finding them after massive enterprise deployments. Security features are being added, governance frameworks are being developed, best practices are emerging.

But we’re not there yet.

WHERE THIS IS ALL HEADING: THE NEXT 3-5 YEARS

So what’s my actual prediction about where this integration landscape is going?

I think we’re watching something genuinely transformative but it’s going to be more evolutionary than revolutionary. Not overnight disruption but steady progression over 3-5 years.

Here’s my timeline assessment:

2025: Experimentation Phase

Lots of pilots and proof-of-concepts

Security challenges prominent

Platform volatility continues

Early adopters learning painful lessons

Standards still competing and evolving

2026: Maturation Beginning

Production deployments increase

Governance frameworks solidify

Security features stabilize

Best practices crystallize

Vendor support expands significantly

2027: Mainstream Adoption Starts

Enterprise deployment accelerates

Protocol convergence around layered architecture

First billion-dollar vertical AI companies emerge

Multi-agent systems become standard

Skills gaps start closing

2028+: Ubiquitous Integration

AI integration becomes baseline expectation

New business models emerge

Specialized solutions proliferate

Revolutionary productivity gains finally materialize at scale

The key question isn’t WHETHER AI integration will transform work and business... it’s WHEN and HOW SMOOTHLY.

Organizations that start experimenting now - building expertise, learning from failures, establishing governance - will be positioned to scale when things mature. Those who wait for everything to be “ready” might miss the window.

But rushing into production deployment with sensitive data on immature platforms is also risky.

It’s a balancing act.

MY HONEST TAKE: WHAT YOU SHOULD ACTUALLY DO

After diving deep into all this research and running my own experiments here’s my practical advice depending on where you’re at:

If you’re a solo developer or small team: Start experimenting NOW. Build side projects with these integration tools. Learn how they work. Understand their capabilities and limitations. Just keep sensitive data out of it until security matures. This is the perfect time to build expertise that’ll be valuable as things scale.

I’m doing exactly this with my Claude Desktop setup and my AI e-commerce experiment. Learning by doing, documenting what works and what doesn’t, building skills that’ll matter long-term.

If you’re a mid-size company: Launch small pilots in non-critical areas. Test multiple approaches rather than betting everything on one platform. Build governance frameworks NOW before you have sprawling deployments that are hard to manage. Focus on use cases where benefits clearly outweigh risks. And get your security team involved from day one.

If you’re an enterprise: You probably have teams evaluating this stuff already. Push them to really understand security implications not just capabilities. Run penetration testing. Build threat models. Don’t rush into production just because competitors are making noise about AI initiatives. Better to be second and safe than first and breached.

For everyone: The organizations that win won’t be the ones that adopt fastest - they’ll be the ones that adopt SMARTLY. Learning from failures while building robust systems that actually work at scale.

THE BIGGER PICTURE: WHAT THIS REALLY MEANS

You know what I find most exciting about all this? We’re watching the formation of fundamental infrastructure in real-time

Like this is similar to what happened with web standards in the late 90s and early 2000s. Competing interests, proprietary advantages, genuine attempts at interoperability, security concerns, everything happening simultaneously.

The question is whether we end up with USB-C for AI - one universal standard that enables ecosystem explosion - or a fragmented landscape where different platforms maintain their own walled gardens.

My bet? Somewhere in between. A layered architecture where different protocols serve different needs, with enough standardization to enable broad interoperability but enough platform-specific advantages that companies can still differentiate.

What’s certain is that AI is moving from isolated chatbots to connected intelligence. The integration wars of 2024-2025 are determining HOW that happens and who controls the infrastructure.

And honestly? That’s pretty exciting to watch unfold. Even if it’s messier than the marketing suggests.

WHERE I’M PLACING MY BETS

You know I believe in building in public and sharing both successes and failures so let me tell you what I’m actually doing with all this

I’m experimenting across multiple platforms. Claude Desktop with MCP and Skills for development work. ChatGPT for quick prototyping and accessing its broader ecosystem. Make.com for orchestration and automation workflows. I’m building my AI experiments in ways that could potentially work across different systems rather than locking into any single platform.

I’m learning the architectures deeply so I understand the tradeoffs. I’m documenting what works and what doesn’t. I’m keeping sensitive data out of experimental systems.

And I’m watching the competitive dynamics closely because the winners and losers in this integration war will shape the AI landscape for years to come.

For my readers thinking about implementing AI in your businesses... this pragmatic middle path is probably smartest right now. Experiment, learn, build expertise. But don’t bet everything on bleeding-edge technology that’s still evolving rapidly.

The transformation IS coming. Just more gradually than the hype suggests.

What’s your take on all this? Are you experimenting with AI integrations? Which platforms are you betting on? Or do you think we’re all getting ahead of ourselves and this whole thing is overhyped? Drop a comment and let’s discuss because I’m genuinely curious how others are thinking about these strategic tradeoffs.

PS. How do you rate today’s email? Leave a comment or “❤️” if you liked the article - I always value your comments and insights, and it also gives me a better position in the Substack network.
