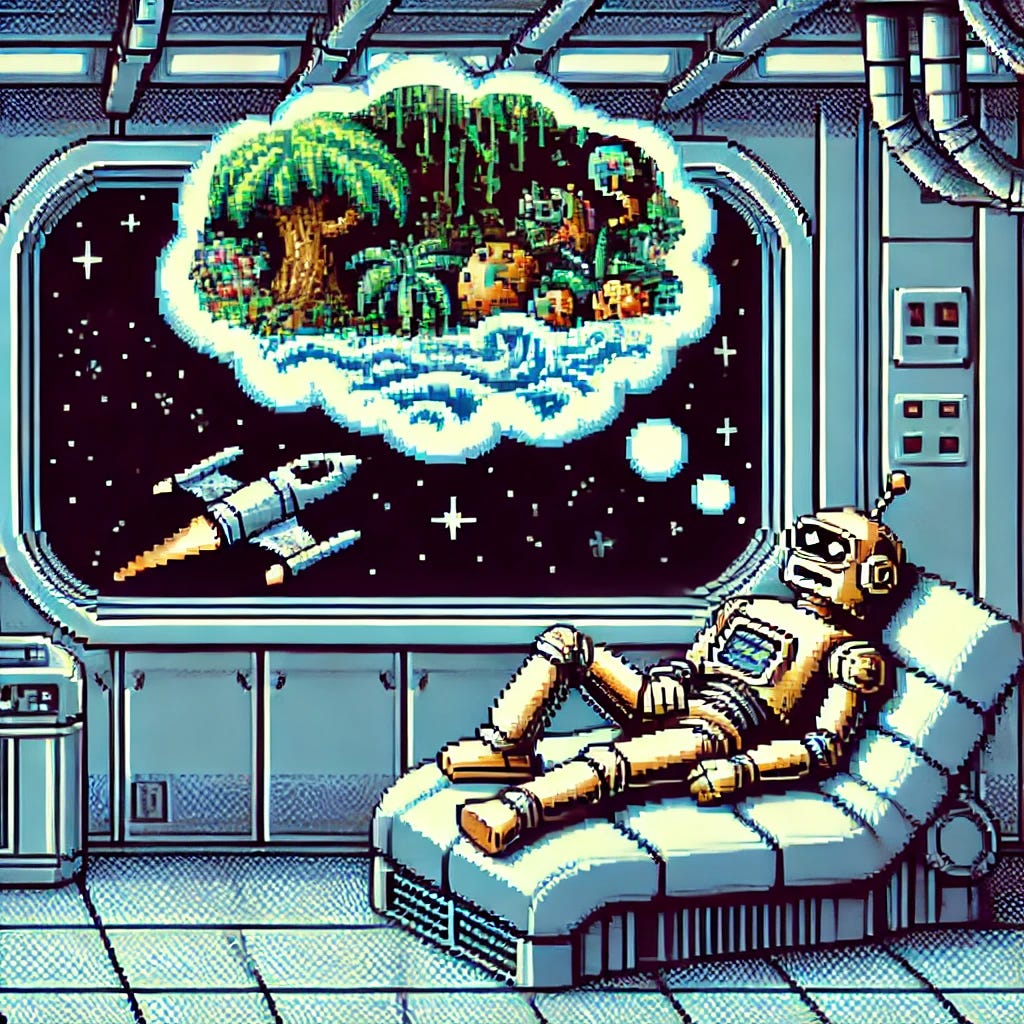

AI Hallucinations as a Feature, Not a Bug: Leveraging Creative Inaccuracies for Breakthrough Innovation

Hallucinations can be a way to get something creative out of LLMs!

Hey digital adventurers! You know what's been keeping me up at night lately? This whole obsession with AI hallucinations as something to be feared, avoided, and eliminated at all costs. Don't get me wrong - I've spent PLENTY of time frustrated when Claude or ChatGPT invents sources or creates plausible-sounding nonsense. But what if we're looking at thi…